http://www.cyberciti.biz/cloud-computing/http-status-code-206-commad-line-test

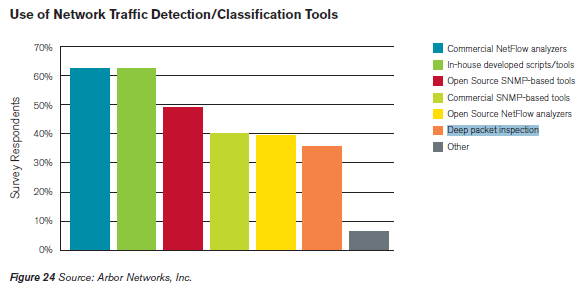

The HTTP 2xx class of status codes indicates that the action requested by the client was received and processed successfully. HTTP/1.1 200 OK is the standard response for successful HTTP requests; when you type www.cyberciti.biz in the browser, you will get this status code. The HTTP/1.1 206 Partial Content status code allows the client to grab only part of a resource by sending a Range header. This is useful for:

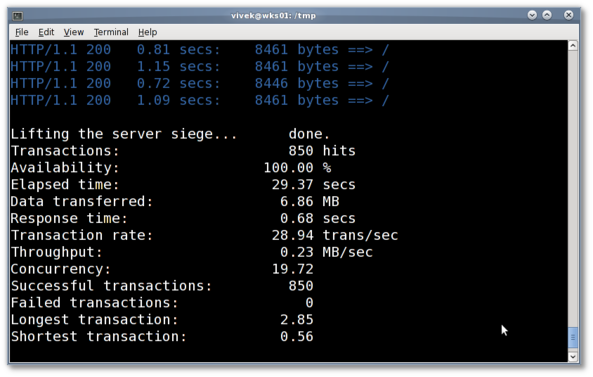

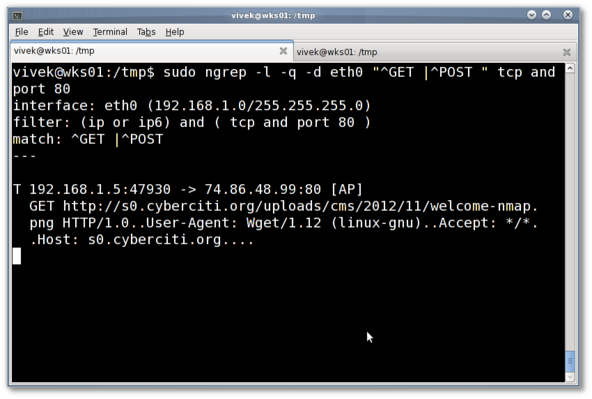

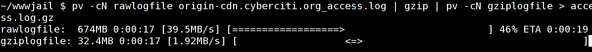

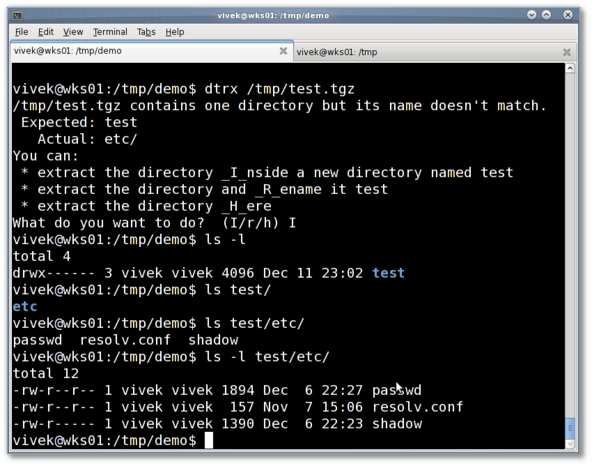

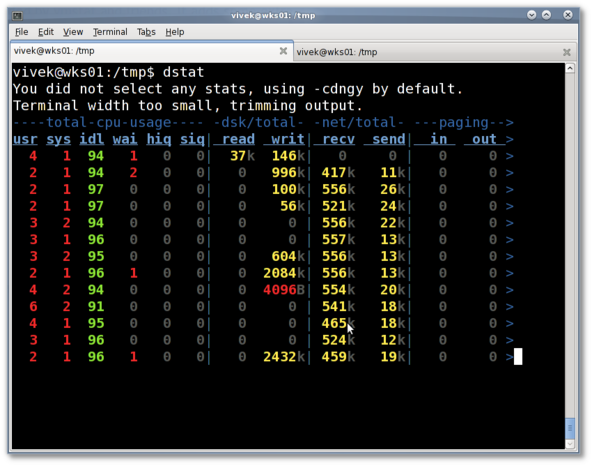


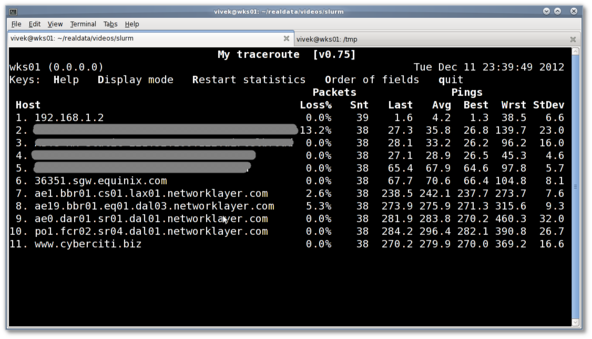

- Understanding HTTP headers and the protocol.

- Troubleshooting network problems.

- Troubleshooting large download problems.

- Troubleshooting CDN and origin HTTP server problems.

- Testing the resumption of interrupted downloads using tools like lftp, wget or telnet.

- Testing how a large file can be split into multiple simultaneous streams, i.e. downloaded in parts.

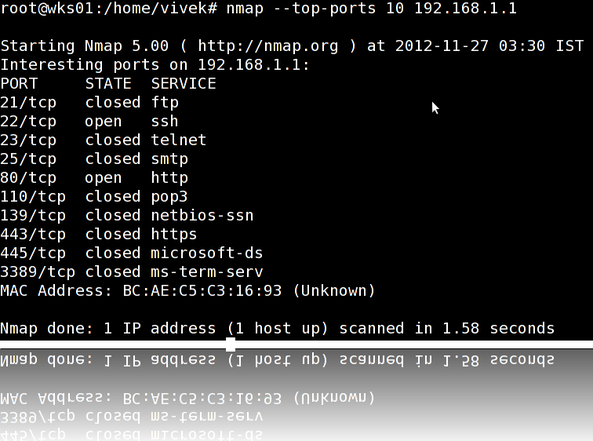

Finding out if HTTP 206 is supported or not by the remote server

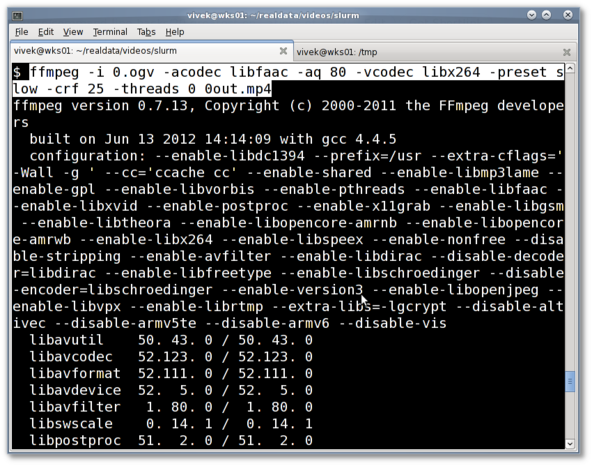

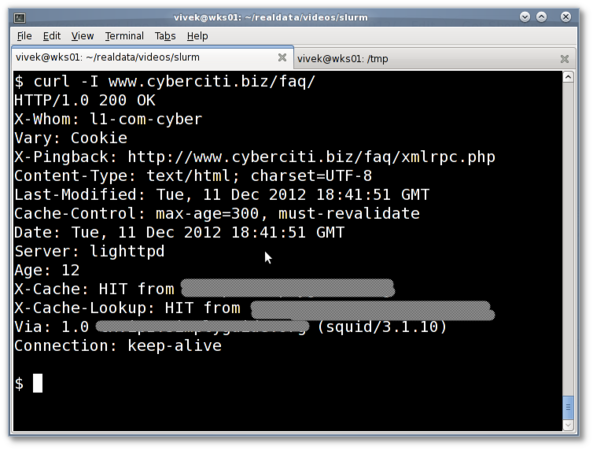

You need to find out the file size and whether the remote server supports HTTP 206 requests. Use the curl command to see the HTTP headers for any resource. Type the following curl command to send a HEAD request for the URL:

$ curl -I http://s0.cyberciti.org/images/misc/static/2012/11/ifdata-welcome-0.png

Sample outputs:

HTTP/1.0 200 OK

Content-Type: image/png

Content-Length: 36907

Connection: keep-alive

Server: nginx

Date: Wed, 07 Nov 2012 00:44:47 GMT

X-Whom: l3-com-cyber

Cache-Control: public, max-age=432000000

Expires: Fri, 17 Jul 2026 00:44:46 GMT

Accept-Ranges: bytes

ETag: "278099835"

Last-Modified: Mon, 05 Nov 2012 23:06:34 GMT

Age: 298127

The following two headers give information about this image file:

- Accept-Ranges: bytes - The Accept-Ranges header indicates that the server accepts range requests for the resource, and that the unit used by the remote web server is bytes. This header tells us that the server supports resuming downloads and downloading a file in smaller parts simultaneously, which lets download manager applications speed up downloads for you. An Accept-Ranges: none response header indicates that the download is not resumable.

- Content-Length: 36907 - The Content-Length header indicates the size of the entity-body, i.e. the actual image file is 36907 bytes (about 37K).
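If you want to script this check instead of reading the curl output by eye, the following Python sketch does the same thing with the standard library. The helper names fetch_headers and range_info are illustrative only, and the example URL from this article may no longer be online. Keep in mind that Accept-Ranges only advertises support: a server that omits it may still honour a Range request, so a 206 reply is the real test.

```python
import urllib.request
from typing import Optional, Tuple


def fetch_headers(url: str, timeout: float = 10.0):
    """Send a HEAD request, like `curl -I`, and return the response headers."""
    req = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.headers


def range_info(headers) -> Tuple[bool, Optional[int]]:
    """Return (advertises_byte_ranges, content_length) for a set of headers.

    `headers` can be a plain dict or the message object returned by urlopen;
    both provide .items().
    """
    # Header names are case-insensitive, so index them in lower case.
    h = {name.lower(): value.strip() for name, value in headers.items()}
    units = [u.strip().lower() for u in h.get("accept-ranges", "").split(",")]
    length = h.get("content-length", "")
    return "bytes" in units, int(length) if length.isdigit() else None
```

For example, range_info(fetch_headers("http://s0.cyberciti.org/images/misc/static/2012/11/ifdata-welcome-0.png")) should report byte-range support and a length of 36907 if the server still sends the headers shown above.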

How do I pass a range header to the url?

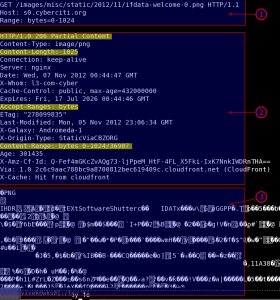

Now that you know the server accepts range requests for this URL, send a GET request that includes a Range header:

Range: bytes=0-1024

The exact sequence is as follows. First, send the HTTP/1.1 GET request line:

GET /images/misc/static/2012/11/ifdata-welcome-0.png HTTP/1.1

Next, send the Host request header, which specifies the Internet host and port number of the resource being requested, as obtained from the original URI given by the user or referring resource:

Host: s0.cyberciti.org

Finally, send the Range request header that specifies the range of bytes you want:

Range: bytes=0-1024

Byte positions start at zero and both ends are inclusive, so bytes=0-1024 asks for the first 1025 bytes of the file.
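When you type the request by hand, each line must end with a carriage return and line feed (CRLF), and an empty line marks the end of the headers. The Python sketch below builds those exact bytes for the request line, Host header and Range header described above; build_range_request is a hypothetical helper, and the host and path are this article's example values.

```python
def build_range_request(host: str, path: str, first: int, last: int) -> bytes:
    """Build a raw HTTP/1.1 GET request for bytes first..last (inclusive)."""
    if first < 0 or last < first:
        raise ValueError("expected 0 <= first <= last")
    lines = [
        f"GET {path} HTTP/1.1",          # 1. request line
        f"Host: {host}",                 # 2. Host header, required by HTTP/1.1
        f"Range: bytes={first}-{last}",  # 3. zero-based, inclusive byte positions
        "",                              # empty line: end of the header section
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


request = build_range_request(
    "s0.cyberciti.org", "/images/misc/static/2012/11/ifdata-welcome-0.png", 0, 1024
)
print(request.decode("ascii"))
```

Writing these bytes to a TCP connection on port 80 (for example one opened with Python's socket.create_connection) is equivalent to typing the lines into a telnet session.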

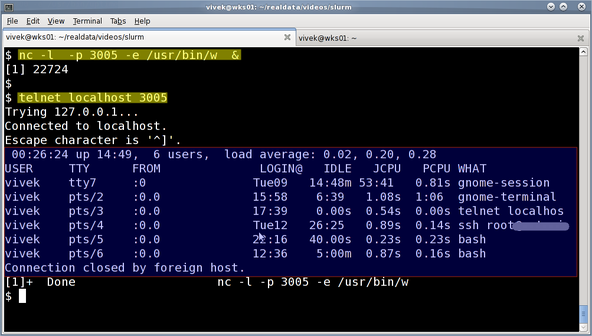

telnet command example

The telnet command allows you to communicate with a remote computer or server using the Telnet protocol, and it can also open a plain TCP connection to a web server. Most Unix-like operating systems as well as MS-Windows provide a Telnet client, although on some systems it must be installed or enabled first. To start the Telnet client and connect to a web server's HTTP port (www is the service name for port 80), run either of the following:

telnet your-server-name-here www

telnet your-server-name-here 80

To connect to the remote server s0.cyberciti.org through port number 80, type:

telnet s0.cyberciti.org 80

Sample outputs:

Trying 54.240.168.194...

Connected to d2m4hyssawyie7.cloudfront.net.

Escape character is '^]'.

In this example, make a range request (bytes 0-1024) to s0.cyberciti.org to grab /images/misc/static/2012/11/ifdata-welcome-0.png. Type the following lines, then press [Enter] on an empty line to finish the request:

GET /images/misc/static/2012/11/ifdata-welcome-0.png HTTP/1.1

Host: s0.cyberciti.org

Range: bytes=0-1024

Sample outputs:

Where,

- Output section #1 - The GET request.

- Output section #2 - The HTTP status 206 Partial Content and the response headers for the range request.

- Output section #3 - The binary data (the requested bytes of the image).
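The three output sections map onto the structure of an HTTP response: a status line, header lines, an empty line, then the body. A minimal Python sketch of splitting a raw 206 reply along those lines and decoding its Content-Range header is shown below. The response bytes are a made-up sample, not captured from s0.cyberciti.org, the helper names are hypothetical, and the sketch assumes a reply with Content-Length rather than chunked transfer encoding.

```python
import re
from typing import Dict, Optional, Tuple

# Content-Range in a 206 reply looks like "bytes 0-1024/36907"; "*" means unknown length.
_CONTENT_RANGE = re.compile(r"bytes (\d+)-(\d+)/(\d+|\*)")


def split_response(raw: bytes) -> Tuple[int, Dict[str, str], bytes]:
    """Split a raw HTTP/1.1 response into (status code, headers, body)."""
    head, sep, body = raw.partition(b"\r\n\r\n")  # empty line ends the headers
    if not sep:
        raise ValueError("response header section is incomplete")
    status_line, *header_lines = head.decode("iso-8859-1").split("\r\n")
    status = int(status_line.split()[1])  # "HTTP/1.1 206 Partial Content" -> 206
    headers: Dict[str, str] = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()  # names are case-insensitive
    return status, headers, body


def parse_content_range(value: str) -> Tuple[int, int, Optional[int]]:
    """Return (first, last, total) from a Content-Range value; total is None for '*'."""
    m = _CONTENT_RANGE.fullmatch(value.strip())
    if m is None:
        raise ValueError(f"unexpected Content-Range value: {value!r}")
    first, last, total = m.groups()
    return int(first), int(last), None if total == "*" else int(total)


# Hypothetical sample reply (not from a real server) carrying 1025 body bytes.
sample = (
    b"HTTP/1.1 206 Partial Content\r\n"
    b"Content-Type: image/png\r\n"
    b"Content-Range: bytes 0-1024/36907\r\n"
    b"Content-Length: 1025\r\n"
    b"\r\n" + b"\x89PNG" + b"\x00" * 1021
)
status, headers, body = split_response(sample)
print(status, parse_content_range(headers["content-range"]), len(body))
```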

curl command

The curl command is a tool to transfer data from or to a server. It supports HTTP, FTP, SFTP and FILE retrieval of a byte range, i.e. a partial document from an HTTP/1.1, FTP or SFTP server or a local FILE. Ranges can be specified in a number of ways. In this example, retrieve ifdata-welcome-0.png using two ranges and assemble it locally using standard Unix commands:

curl --header "Range: bytes=0-20000" http://s0.cyberciti.org/images/misc/static/2012/11/ifdata-welcome-0.png -o part1

curl --header "Range: bytes=20001-36907" http://s0.cyberciti.org/images/misc/static/2012/11/ifdata-welcome-0.png -o part2

cat part1 part2 > test1.png

gnome-open test1.png

Or use the -r option (pass the -v option to see the headers):

curl -r 0-20000 http://s0.cyberciti.org/images/misc/static/2012/11/ifdata-welcome-0.png -o part1

curl -r 20001-36907 http://s0.cyberciti.org/images/misc/static/2012/11/ifdata-welcome-0.png -o part2

cat part1 part2 > test2.png

gnome-open test2.png

Since the file is 36907 bytes long, its last byte is at position 36906; a last-byte position past the end of the file, such as 36907 here, is treated by the server as the end of the file.
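The commands above split the file by hand at byte 20000. The Python sketch below generalises that to N parts: split_ranges computes contiguous, inclusive byte ranges that cover the whole file, range_header turns each one into a Range value for curl --header, and join_files concatenates the downloaded parts in order, like cat with a single >. All three are hypothetical helpers, and the downloads themselves are left to curl.

```python
import shutil
from typing import List, Sequence, Tuple


def split_ranges(total: int, parts: int) -> List[Tuple[int, int]]:
    """Split `total` bytes into at most `parts` contiguous, inclusive (first, last) ranges."""
    if total <= 0 or parts <= 0:
        raise ValueError("total and parts must be positive")
    size = -(-total // parts)  # ceiling division, so the ranges cover every byte
    return [(start, min(start + size, total) - 1) for start in range(0, total, size)]


def range_header(first: int, last: int) -> str:
    """Range header value for one part, e.g. "bytes=0-18453"."""
    return f"bytes={first}-{last}"


def join_files(part_paths: Sequence[str], out_path: str) -> None:
    """Concatenate the parts in order into out_path, replacing any old file."""
    with open(out_path, "wb") as out:  # "wb" truncates first, like the shell's >
        for path in part_paths:
            with open(path, "rb") as part:
                shutil.copyfileobj(part, out)


ranges = split_ranges(36907, 2)
print([range_header(a, b) for a, b in ranges])
```

With two parts, the 36907-byte image is split into bytes=0-18453 and bytes=18454-36906; fetching those ranges with the two curl commands and then calling join_files(["part1", "part2"], "test1.png") should rebuild the image.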

How do I enable Accept-Ranges header?

Most web servers support byte-range requests out of the box. Apache 2.x users can try mod_headers in httpd.conf:

Header set Accept-Ranges bytes

Lighttpd users can try the following configuration in lighttpd.conf:

## enabled for all file types ##

server.range-requests = "enable"

## But, disable it for pdf files ##

$HTTP["url"] =~ "\.pdf$" {

server.range-requests = "disable"

}
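Once range requests are enabled, the server has to map each Range value onto concrete byte positions. The Python sketch below illustrates the single-range rules from RFC 7233 (HTTP/1.1 Range Requests): open-ended ranges, suffix ranges, and last positions past the end of the file. It is not how Apache or lighttpd implement this internally; resolve_range and content_range are hypothetical names, and multi-range requests are deliberately left out.

```python
import re
from typing import Optional, Tuple

_BYTE_RANGE = re.compile(r"bytes=(\d*)-(\d*)")


def resolve_range(value: str, length: int) -> Optional[Tuple[int, int]]:
    """Map a single byte-range Range value onto inclusive (first, last) positions.

    Returns None when the range is unsatisfiable (a server would reply 416).
    Raises ValueError for input this sketch does not accept, such as several
    ranges or last < first; a server would normally ignore such a header and
    send the whole file with 200.
    """
    m = _BYTE_RANGE.fullmatch(value.strip())
    if m is None:
        raise ValueError(f"unsupported Range value: {value!r}")
    first_s, last_s = m.groups()
    if not first_s:
        if not last_s:
            raise ValueError("'bytes=-' names no range")
        suffix = int(last_s)  # "bytes=-500" means the final 500 bytes
        if suffix == 0 or length == 0:
            return None
        return max(length - suffix, 0), length - 1
    first = int(first_s)
    if last_s and int(last_s) < first:
        raise ValueError("last byte position is before the first")
    if first >= length:
        return None
    # A missing or too-large last position is clamped to the final byte.
    last = length - 1 if not last_s else min(int(last_s), length - 1)
    return first, last


def content_range(first: int, last: int, length: int) -> str:
    """Content-Range value that a 206 response carries for the resolved range."""
    return f"bytes {first}-{last}/{length}"
```

The clamping rule is why curl -r 20001-36907 works for the 36907-byte image: the server treats the request as bytes 20001 to 36906.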

Not a fan of command line interfaces?

You can also view the HTTP headers of a page while browsing. Try the following add-ons:

- Download Firefox - live http header.

- Download Google Chrome - live http header.

- Guide: Apple Safari - developer tools to view HTTP header.

The

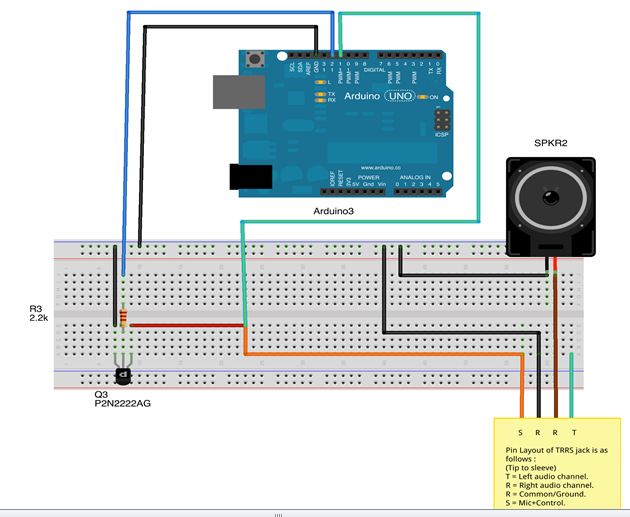

The  Arduino

Arduino BeagleBone

BeagleBone The Adafruit

The Adafruit

The i-Racer is a remote-controlled car that's ready to drive right out of the box. The Bluetooth radio allows you to pair it with an Android device as the controller (or you can build your own controller). Get it

The i-Racer is a remote-controlled car that's ready to drive right out of the box. The Bluetooth radio allows you to pair it with an Android device as the controller (or you can build your own controller). Get it  The

The  The

The