http://blog.smartbear.com/software-quality/bid/256072/13-reasons-your-open-source-docs-make-people-want-to-scream

Most open source developers like to think about the quality of the software they build, but the quality of the documentation is often forgotten. Nobody talks about how great a project’s docs are, and yet documentation has a direct impact on your project’s success. Without good documentation, people either do not use your project, or they do not enjoy using it. Happy users are the ones who spread the news about your project – which they do only after they understand how it works, which they learn from the software’s documentation. Yet, too many open source projects have disappointing documentation. And it can be disappointing in several ways.

The examples I give below are hardly authoritative, and I don’t mean to pick on any particular project. They're only those that I've used recently, and not meant to be exemplars of awfulness. Every project has committed at least a few of these sins. See how many your favorite software is guilty of (whether you are a user or a developer), and how many you personally can help fix.

1. Lacking a good README or introduction

The README is the first impression that potential users get about your project. If the project is on GitHub, the README is automatically displayed on the project home page. If you get it wrong, they might never come back. You want to grab the reader's attention and encourage them to continue investigating the project.

The README should explain, at least:

- What the project does

- Whom it's for

- On what hardware or other platform it runs

- Any major dependencies, such as "Requires Python 2.6 and libxml"

- How to install it, or a pointer to in-depth directions

All this has to be written for someone who never heard of your project before, and may never even have considered something like your project. If you've got a module that calculates Levenshtein distance, don't assume that everyone reading your README knows what that is. Explain that the Levenshtein distance is used to compare differences between strings, and link to more detailed explanation for someone who wants to explore its uses.
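A README for such a module could pair that one-sentence explanation with a short worked example. The following is only a minimal sketch in Perl, not taken from any real module: the function name `levenshtein` and the sample strings are assumptions chosen for illustration.

```perl
use strict;
use warnings;
use List::Util qw(min);

# Hypothetical helper: classic dynamic-programming edit distance.
# Returns the number of single-character insertions, deletions, and
# substitutions needed to turn $s into $t.
sub levenshtein {
    my ($s, $t) = @_;

    # Distances from the empty prefix of $s to each prefix of $t.
    my @prev = (0 .. length($t));

    for my $i (1 .. length($s)) {
        # Turning the first $i characters of $s into '' takes $i deletions.
        my @curr = ($i);
        for my $j (1 .. length($t)) {
            my $cost = substr($s, $i - 1, 1) eq substr($t, $j - 1, 1) ? 0 : 1;
            push @curr, min(
                $prev[$j] + 1,            # deletion
                $curr[$j - 1] + 1,        # insertion
                $prev[$j - 1] + $cost,    # substitution (free on a match)
            );
        }
        @prev = @curr;
    }
    return $prev[-1];
}

# "kitten" becomes "sitting" in three edits.
print levenshtein('kitten', 'sitting'), "\n";    # prints 3
```

Even a reader who has never heard the term can see from the last line what the module is for.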

Don't describe your project in relation to another project, such as "NumberDoodle is like BongoCalc, but better!" That's no help to someone who's never heard of BongoCalc.

2. Docs not available online

Although I haven't seen any studies on the topic, I'd bet that 90% of documentation lookups are done with Google and a browser over the Internet. Your project's docs have to be online and available. Given that, it's embarrassing that my own project, ack, would neglect having the docs available where most people would look for them. My assumption was based on my own use case, that if I want to know how a command line tool works, I'll check its man page.

How was this brought to my attention? Users wrote to me asking questions that were answered in the FAQ, which made me annoyed that they weren't reading my FAQ. Turns out they were looking at the website, but I hadn't posted the FAQ there. It's an easy mistake to make. I'm close to the project and I never actually use the FAQ myself, so I don't notice it missing online. Many problems fall into this trap: Authors not putting themselves in the users' shoes.

3. Docs only available online

The flipside of this problem is to have the documentation only online. Some projects do not ship the documentation with the project's deliverables, or include a substandard version of the docs.

The search engine Solr, for example, has an excellent wiki that is effectively the project’s documentation. Unfortunately, the docs that ship with the download are 2,200 pages of autogenerated API Javadocs. The only documentation for the end user is a single page tutorial.

The PHP language doesn't ship with any documentation. If you want the docs, you have to go to a separate page to get them. Worse, only the core docs are available for download, without the helpful annotations from users (see "Not accepting user input" below), and they're not in the same easy-to-navigate format that's available online.

Open source projects can't assume that users have Internet access when they need docs. Airplane mode still exists. Even then, you don't want the user to rely on your project's website being up. At least twice over the past few months I've found the Solr wiki to be down in the middle of the workday while I was hunting for information on a tricky configuration problem.

One project that gets it right is Perl and its CPAN module repository. Documentation for each module is available at either search.cpan.org or metacpan.org in an easy-to-read hyperlinked format. For offline needs, the documentation for each module is embedded in the code itself, and when the module is installed on a user's system, local documentation is created as man pages. Users can also use `perldoc Module::Name` to get the docs from the shell. Online or offline: It's your choice.
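To make "embedded in the code itself" concrete, here is a minimal sketch of Perl's POD (Plain Old Documentation) format living in the same file as the code it describes. The script name `greet.pl` and the `greeting` function are hypothetical, invented only for this illustration.

```perl
#!/usr/bin/perl
use strict;
use warnings;

=head1 NAME

greet.pl - print a greeting (hypothetical example script)

=head1 SYNOPSIS

    perl greet.pl World

=head1 DESCRIPTION

Builds a greeting for the name given on the command line.
If no name is given, it greets "World".

=cut

# Return the greeting string for a given name.
sub greeting {
    my ($name) = @_;
    return "Hello, $name!";
}

# Fall back to "World" when no argument is passed.
print greeting( $ARGV[0] // 'World' ), "\n";
```

Perl skips everything between `=head1` and `=cut` when it runs the script, while a command such as `perldoc ./greet.pl` should render those same sections as readable documentation, so the code and its docs travel together.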

4. Docs not installed with the package

This problem is usually a failing of the package creators, not the project authors. For example, in Ubuntu Linux, the documentation for the Perl language is a separate, optional package from the language itself. The user must know she has to explicitly install the documentation as well as the core language or she won't have the documentation when she needs it. This trade-off of a few megabytes of disk space at the expense of documentation-at-hand on the user's system serves everyone poorly.

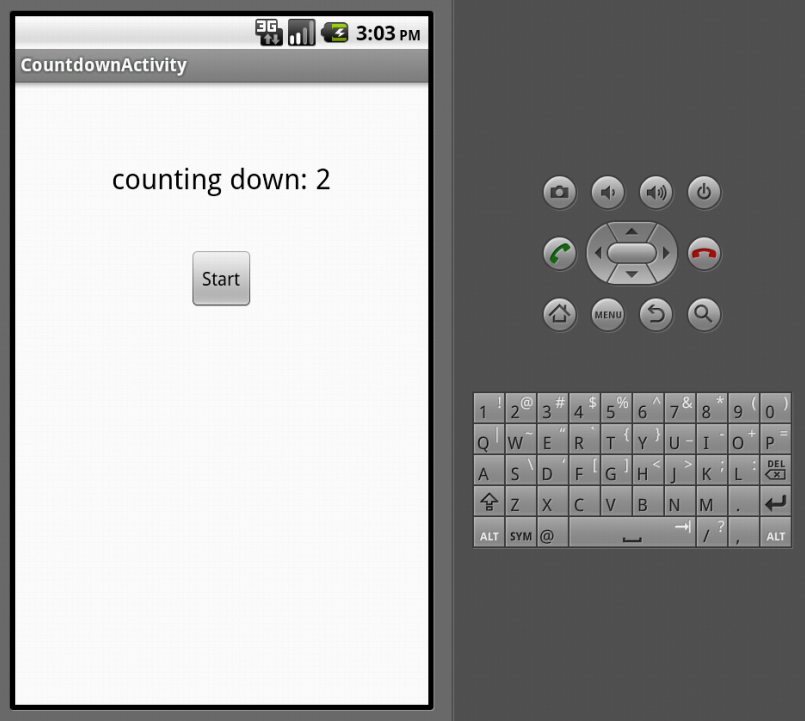

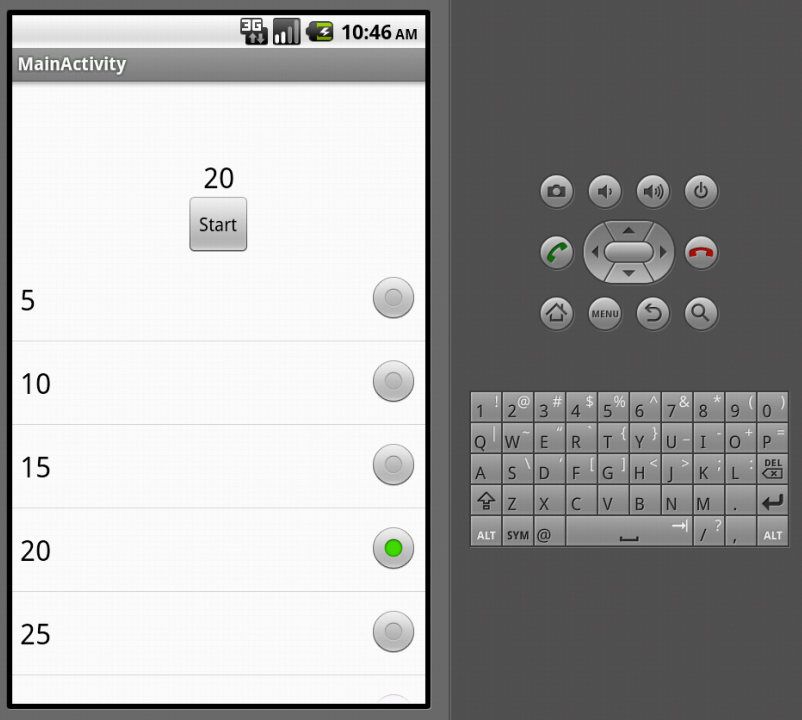

5. Lack of screenshots


There's no better way to grab the potential user's attention, or to illustrate proper usage, than with judicious screenshots. A picture is worth a thousand words. That’s even more important on the Internet because you may not get the reader to read more than a few hundred of your words at all.

Screenshots are also invaluable for the user following along with the prose, trying to make something work right. A screenshot lets him visually compare his results to those in the docs to reassure himself that he's done a task correctly or to easily find what's not right.

It's becoming more common to have videos on the website giving an overview of the project, and those are great. So are in-depth videos that show the steps of a complex process. The Plone project, for example, has an entire site dedicated to video tutorials. However, videos can’t take the place of screenshots. A user wants to see quickly what the screens look like without sitting through a video. Videos also don’t show up in a Google image search, as screenshots do.

6. Lack of realistic examples

For code-based projects, the analog of screenshots is good, solid examples of the code in use. These examples should not be abstract, but direct from the real world. Don’t create throwaway examples full of “demo name here” and lorem ipsum. Take the time to create a relevant example with a user story that represents how the software solves a problem.

There's a reason we have story problems in math class: They help us apply what was taught.

Say I've written a web robot module, and I'm explaining the follow_link method. I might show the method definition like this:

    $mech->follow_link( text_regex => $regex_object, n => $link_index );

But look how obvious it becomes when adding some reality in an example.

    # Follow the 2nd link matching the string "download"
    $mech->follow_link( text_regex => qr/download/, n => 2 );

The abstract placeholder variable names `$regex_object` and `$link_index` now have some grounding in the mind of the reader.

Of course, your examples shouldn't just be brief two-line snippets. As Rich Bowen of the Apache project puts it, "One correct, functional, tested, commented example trumps a page of prose, every time."

Show as much as you can. Space is cheap. Make a dedicated section of documentation for examples, or even a cookbook. Ask users to send in working code, and publish their best examples.

7. Inadequate links and references

You have hyperlinks. Use them.

Don't assume that because something is explained in one part of the docs that the reader has already read that part, or even knows where it is. Don't just mention that this part of the code manipulates frobbitz objects. Explain briefly on the first use of the term what a frobbitz object is, or link to the section of the manual that explains what a frobbitz is. Best of all, do both!

8. Forgetting the new user

It's sometimes easy when writing the docs to write them from the perspective of you, the author of the software. New users need introductory documentation to ease them in.

The introduction should be a separate page of documentation, ideally with examples that let the new user get some success with the software. Think about the excitement you feel when you start playing with a new piece of software and you get it to do something cool. Make that happen for your new users, too.

For example, a graphing package might present a series of screenshots that show how to add data to a file, invoke the grapher, and then show the resulting graphs. A code library might show some examples of calling the library, and then show the resulting output. Keep it simple. Give an easy win. The text should introduce terms at the appropriate places, with links to more detailed documentation about the term.

A separate document for these sorts of introductory ideas gives the user a quick understanding of the software. It also keeps the introductory explanations out of the main part of your docs.


9. Not listening to the users

Project owners must listen to the users of the documentation. The obvious element is listening to suggestions and requests from people who are actively using your software. When a user takes the time to mail or post something like, "It would have helped me install the program if there had been an explanation or links to how to install database drivers," take that message seriously. For every one user who emails you about a problem, you can expect that ten others don't say anything but still have the same problem.

Just as important, however, is listening to user problems, and considering the reasons behind them. If people frequently have trouble figuring out how to perform bulk database updates, the first course of action is to add a question to the FAQ (you do have an FAQ, right?) that addresses bulk database updates. However, the question may also be an indication that the section on database updates isn't written clearly enough. Or perhaps there isn't a pointer to that section from the introductory overview document, so your users never know to read the rest of the manual.

Besides helping more people discover how useful your project is, this also eases frustration on the part of the project’s existing community. If your mailing list, forum or IRC channel is filled with people who ask the same “dumb” (or not-so-dumb) questions all the time that everyone gets tired of responding to, recognize that these are the Frequently Asked Questions, and putting the answers in a single find-able spot helps everyone focus on the fun stuff.

Keep an eye on user questions in outside forums, too. Search sites like StackOverflow regularly, and set up a Google Alert for your project name to be kept aware of how your project is being discussed on the Internet.

10. Not accepting user input

If your project has a large enough user base, it may make sense to incorporate user comments directly into the documentation. The best example I've seen of this is for the PHP language. Each page of the documentation allows authenticated users to add comments to the page, to help clarify points, or to add examples that aren't in the core docs. The PHP team also gives the reader the choice of displaying documentation with or without user comments.

As useful as this is, it requires maintenance. Comments should be weeded over time to prevent overgrowth. For example, the PHP documentation page for how to invoke PHP from the command line includes 43 comments from users dating back to 2001. The comments dwarf the core documentation. The comments should be archived or eliminated, with the most important points incorporated into the core documentation.

A wiki is also a good approach. However, if your wiki doesn't allow the user to download all the pages in one big batch (see item #3 above), then your users are at the mercy of their Internet connection and the web server that hosts the project.

11. No way to see what the software does without installing it

At the minimum, every software project needs a list of features and a page of screenshots to let the curious potential user see why she should try it out. For the user shopping around for software packages to use, make it easy for her to see why it's worth the time to download and install it.

Pictures are a great way to do this. Your project should have a "Screenshots" page that shows real examples of the tool in use (see item #5 above). If the project is purely code, like a library, then there should be an example page that shows code using the project.

12. Relying on technology to do your writing

Too often, software authors use automated documentation systems to do their work. They forget about the part where they have to write prose. The automated system can make things easier to maintain, but it doesn't obviate the need for human writing.

The worst case of this is changelogs that are nothing but a dump of commit messages from the version control system, with no top-level summary that explains them. A changelog should list new features, bugs fixed, and potential incompatibilities, and its target audience is the end user. A commit log is for people working on the project itself; it's easy to generate, but it's not what users need.

Take a look at this page from the docs for Solarium, a PHP interface to the Solr search engine. First, the disclaimer takes up the top half of the screen, giving no information to the reader at all. Second, literally nothing on the page is any more descriptive than a list of the function names. The "METHOD_FC" enum means "Facet method fc". But what is FC? There is no explanation of the different facet methods, nor links to how one would find out. The automatically generated pages look nice, and they may feel like documentation, but they're really not.

13. Arrogance and hostility toward the user

The attitude of RTFM (Read the Freaking Manual) is toxic to your project and to your documentation.

It is the height of arrogance to assume that all problems that relate to someone not knowing how to use your project are the fault of the user. It is even worse to assign a moral failing of "They're just too lazy to read," which you can't possibly know. Most of us have encountered these attitudes as users. Why would you inflict them upon the people who want to use the software you create?

Even if it's provably true that users could find their answers in your documentation but they aren't doing so, it's foolish to assume that it's because the user has failed in some way. Maybe your documentation is poorly written, or hard to read, or presented poorly on the screen. Perhaps you need to improve the Getting Started section (item #8 above) that explains what the software aims to do. Maybe some bits of information need to be repeated in multiple parts of the docs. Maybe it's not made clear to the reader where to find certain knowledge tidbits.

You know that new users of your software come to your project knowing nothing. Your project documentation team can do its best to ensure that ignorance is easily curable.

Wrap-up

I'm sure you have come across many of these problems with docs, and I hope there are some you haven't thought of. Let us know about the problems that bother you in the comments below. I don't mean to point fingers at any given project’s examples, since every open source project has some problem or another.

Most of all, I hope that if you recognize a problem in the documentation of projects you're involved with, you consider this a nudge to take action to improve the situation. Fortunately, improving documentation is an ideal way to get newcomers involved with your project. I’m often asked “How can I get started in open source?”, and I recommend documentation improvements as a good way to start.

Make it as simple as possible for contributors, whether novice or veteran, to know where your docs need help. Create a list of tasks, possibly in your bug tracking system, that explain what needs help. Be specific in what your needs are. Don’t just say, “We need more examples.” Create specific tasks, like “Add example code of how to do Task X,” “Add screen shots of the report generator screens,” or “Add dependency information to the README.” Contributors want to help, but are often stymied by not knowing where to begin.

Docs aren't the most glamorous part of any open source project, and for most of us they're not fun, but without good documentation, users aren't served as well as they could be, and your project will suffer in the long run.
