http://www.linuxjournal.com/node/1338711?utm_source=feedburner&utm_medium=feed&utm_campaign=Feed%3A+linuxjournalcom+%28Linux+Journal+-+The+Original+Magazine+of+the+Linux+Community%29&hootPostID=b66b3eb849d4698dd512beafb71b7602

Lightweight virtual containers with PID 1.

In this article, I demonstrate a method to build one Linux system within another using the latest utilities within the systemd suite of management tools. The guest OS container design focuses upon BusyBox and Dropbear for the userspace system utilities, but I also work through methods for running more general application software so the containers are actually useful.

This tutorial was developed on Oracle Linux 7, and it likely will run unchanged on its common brethren (Red Hat, CentOS, Scientific Linux), and from here forward, I refer to this platform simply as V7. Slight changes may be necessary on other systemd platforms (such as SUSE, Debian or Ubuntu). Oracle's V7 runs only on the x86_64 platform, so that's this article's primary focus.

Required Utilities

Red Hat saw fit to remove the long-included BusyBox binary from its V7 distribution, but this easily is remedied by downloading the latest binary directly from the project's Web site. Since the /home filesystem gets a large amount of space by default when installing V7, let's put it there for now. Run the commands below as root until indicated otherwise:

cd /home

wget http://busybox.net/downloads/binaries/latest/busybox-x86_64

You also can get a binary copy of the Dropbear SSH server and client from this location:

wget http://landley.net/aboriginal/downloads/

↪binaries/extras/dropbearmulti-x86_64

For this article, I used the following versions:

- BusyBox v1.21.1.

- Dropbear SSH multi-purpose v2014.63.

These are static binaries that do not link against shared objects—nothing else is required to run them, and they are ideal for building a new UNIX-ish environment quickly.

Build a chroot

The chroot system call and the associated shell utility allow an arbitrary subdirectory somewhere on the system to be declared as the root for all child processes. The commands below populate the "chroot jail", then lock you in. Note that the call to chroot needs your change to the SHELL environment variable below, as you don't have bash inside the jail (and it's likely the default value of $SHELL):

export SHELL=/bin/sh

mkdir /home/nifty

mkdir /home/nifty/bin

cd /home/nifty/bin

cp /home/busybox-x86_64 /home/dropbearmulti-x86_64 .

chmod 755 busybox-x86_64 dropbearmulti-x86_64

./busybox-x86_64 --list | awk '{print "ln -s

↪busybox-x86_64 " $0}' | sh

chroot /home/nifty

export PATH=/bin

ls -l

###(try some commands)

exit

Take some time to explore your shell environment after you launch your chroot above before you exit. Notice that you have a /bin directory, which is populated by soft links that resolve to the BusyBox binary. BusyBox changes its behavior depending upon how it is called—it bundles a whole system of utility programs into one convenient package.

Try a few additional UNIX commands that you may know. Some that work are

vi,

uname,

uptime and (of course) the shell that you are working inside. Commands that don't work include

ps,

top and

netstat. They fail because they require the /proc directory (which is dynamically provided by the Linux kernel)—it has not been mounted within the jail.

Note that few native utilities will run in the chroot without moving many dependent libraries (objects). You might try copying bash or gawk into the jail, but you won't be able to run them (yet). In this regard, BusyBox is ideal, as it depends upon nothing.

Build a Minimal UNIX System and Launch It

The systemd suite includes the eponymous program that runs as PID 1 on Linux. Among many other utilities, it also includes the

nspawn program that is used to launch containers. Containers that are created by

nspawn fix most of the problems with chroot jails. They provide /proc, /dev, /run and otherwise equip the child environment with a more capable runtime.

Next, you are going to configure a getty to run on the console of the container that you can use to log in. Being sure that you have exited your chroot from the previous step, run the following commands as root:

mkdir /home/nifty/etc

mkdir /home/nifty/root

echo 'NAME="nifty busybox container"'>

↪/home/nifty/etc/os-release

cd /home/nifty

ln -s bin sbin

ln -s bin usr/bin

echo 'root::0:0:root:/root:/bin/sh'>

↪/home/nifty/etc/passwd

echo 'console::respawn:/bin/getty 38400 /dev/console'>

↪/home/nifty/etc/inittab

tar cf - /usr/share/zoneinfo | (cd /home/nifty; tar xvpf -)

systemd-nspawn -bD /home/nifty

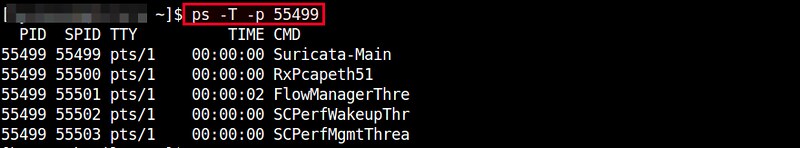

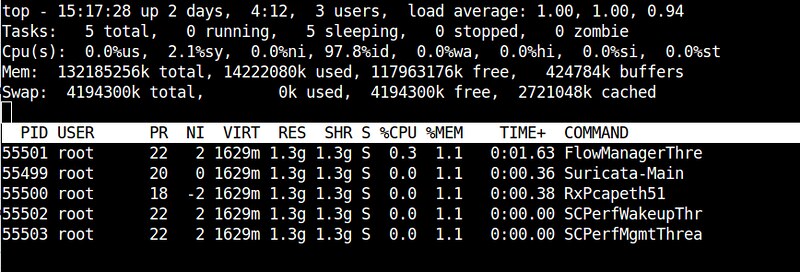

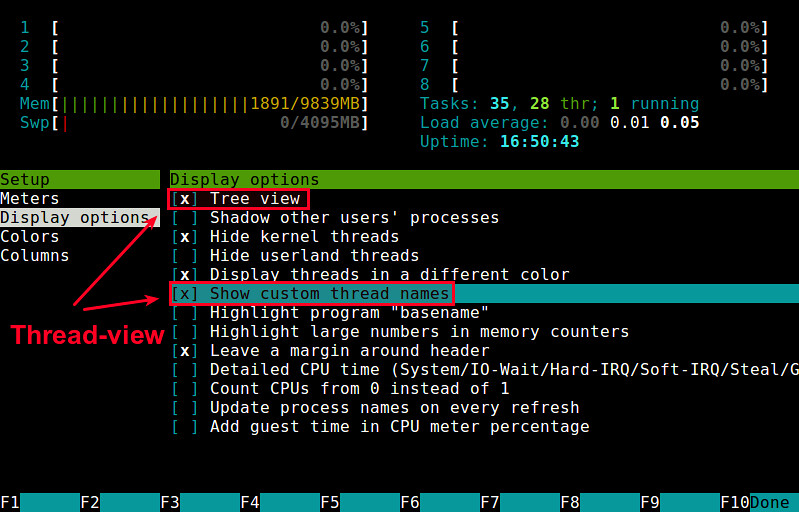

After you have executed the

nspawn above, you will be presented with a "nifty login" prompt. Log in as root (there is no password—yet), and try a few more commands. You immediately will notice that

psand

top work, and there is now a /proc.

You also will notice that the processes that appear in the child container also appear on the host system, but different PIDs will be assigned between the parent and child.

Note that you'll also receive the message: "The kernel auditing subsystem is known to be incompatible with containers. Please make sure to turn off auditing with 'audit=0' on the kernel command line before using systemd-nspawn. Sleeping for 5s..." The audit settings don't seem to impact the BusyBox container login, but you can adjust your kernel command line in your grub configuration (at least to silence the warning and stop the delay).

Running Dropbear SSH in Your Container

It's best if you configure a non-root user of your system and forbid network root logins. The reasoning will become clear when I address container security.

Run all of these commands as root within the container:

cd /bin

ln -s dropbearmulti-x86_64 dropbear

ln -s dropbearmulti-x86_64 ssh

ln -s dropbearmulti-x86_64 scp

ln -s dropbearmulti-x86_64 dropbearkey

ln -s dropbearmulti-x86_64 dropbearconvert

Above, you have established the names that you need to call Dropbear, both the main client and server, and the sundry key generation and management utilities.

You then generate the host keys that will be used by this container, placing them in a new directory /home/nifty/etc/dropbear (as viewed by the host):

mkdir /etc/dropbear

dropbearkey -t rsa -f /etc/dropbear/dropbear_rsa_host_key

dropbearkey -t dss -f /etc/dropbear/dropbear_dss_host_key

dropbearkey -t ecdsa -f /etc/dropbear/dropbear_ecdsa_host_key

Various directories are then created that you will need shortly:

mkdir -p /var/log/lastlog

mkdir /home

mkdir /var/run

mkdir /tmp

mkdir /var/tmp

chmod 01777 /tmp /var/tmp

You then create the inittab, which will launch syslogd and Dropbear once at startup (in addition to the existing getty that is respawned whenever it dies):

echo ::sysinit:/bin/syslogd >> /etc/inittab

echo '::sysinit:/bin/dropbear -w -p 2200'>> /etc/inittab

Next, you add a shadow file and create a password for root:

echo root:::::::: > /etc/shadow

chmod 600 /etc/shadow

echo root:x:0: > /etc/group

passwd -a x root

Note that the BusyBox

passwd call used here generated an MD5 hash—there is a $1$ prefix in the second field of /etc/shadow for root. Additional hashing algorithms are available from this version of the passwd utility (the options

-a s will generate a $5$ SHA256 hash, and

-a sha512 will generate a $6$ hash). However, Dropbear seems to be able to work only with $1$ hashes for now.

Finally, add a new user to the system, and then halt the container:

adduser -h /home/luser -D luser

passwd -a x luser

halt

You should see container shutdown messages that are similar to a system halt.

When you next start your container, it will listen on socket 2200 for connections. If you want remote hosts to be able to connect to your container from anywhere on the network, run this command as root on the host to open a firewall port:

iptables -I INPUT -p tcp --dport 2200 --syn -j ACCEPT

The port will be open only until you reboot. If you'd like the open port to persist across reboots, use the

firewall-config command from within the X Window System (set the port on the second tab in the GUI).

In any case, run the container with the previous

nspawn syntax, then try to connect from another shell within the parent host OS with the following:

ssh -l luser -p 2200 localhost

You should be able to log in to the luser account under a BusyBox shell.

Executing Programs with Runtime Dependencies

If you copy various system programs from /bin or /usr/bin into your container, you immediately will notice that they don't work. They are missing shared objects that they need to run.

If you had previously copied the gawk binary in from the host:

cp /bin/gawk /home/nifty/bin/

you would find that attempts to execute it fail with "gawk: not found" errors (on the host, there usually will be explicit complaints about missing shared objects, which are not seen in the container).

You easily can make most of the 64-bit libraries available with an argument to

nspawn that establishes a bind mount:

systemd-nspawn -bD /home/nifty --bind-ro=/usr/lib64

Then, from within the container, run:

cd /

ln -s usr/lib64 lib64

You then will find that many 64-bit binaries that you copy in from the host will run (running

/bin/gawk -V returns "GNU Awk 4.0.2"—an entire Oracle 12c instance is confirmed to run this way). The read-only library bind mount also has the benefit of receiving security patches immediately when they appear on the host.

There is a significant security problem with this, however. The root user in the container has the power to

mount -o remount,rw /usr/lib64 and, thus, gain write access to your host library directories. In general, you cannot give root to a container user that you don't know and trust—among other problems, these mounts can be abused.

You also might be tempted to mount the /usr/lib directory in the same manner. The difficulty you will find is that the systemd binary will be found under that directory tree, and

nspawn will try to execute it in preference to BusyBox init. Enabling 32-bit runtime support likely will involve more directory and mounting gymnastics than was required for /usr/lib64.

And now, I'm going off on a tangent.

systemd Service Files

You will need to call on the host PID 1 (systemd) directly to launch your container in an automated manner, potentially at boot. To do this, you need to create a service file.

Because there is a dearth of clear discussion on moving inittab and service functions into systemd, I'll cover all the basic uses before creating a service file for the container.

Start by configuring a telnet server. The telnet protocol is not secure, as it transmits passwords in clear text. Don't practice these examples on a production server or with sensitive information or accounts.

Classical telnetd is launched by the inetd superserver, both of which are implemented by BusyBox. Let's configure inetd for telnet on port 12323. Run the following as root on the host:

echo '12323 stream tcp nowait root

↪/home/nifty/bin/telnetd telnetd -i -l

/home/nifty/bin/login'>> /etc/inetd.conf

After the configuring above, if you manually launch the inetd contained in BusyBox, you will be able to telnet to port 12323. Note that the V7 platform does not include a telnet client by default, so you either can install it with yum or use the BusyBox client (which the example below will do). Unless you open up port 12323 on your firewall, you will have to telnet to localhost.

Make sure any inetd that you started is shut down before proceeding to create an inetd service file below:

echo '[Unit]

Description=busybox inetd

#After=network-online.target

Wants=network-online.target

[Service]

#ExecStartPre=

#ExecStopPost=

#Environment=GZIP=-9

#OPTION 1

ExecStart=/home/nifty/bin/inetd -f

Type=simple

KillMode=process

#OPTION 2

#ExecStart=/home/nifty/bin/inetd

#Type=forking

#Restart=always

#User=root

#Group=root

[Install]

WantedBy=multi-user.target'>

↪/etc/systemd/system/inetd.service

systemctl start inetd.service

After starting the inet service above, you can check the status of the dæmon:

[root@localhost ~]# systemctl status inetd.service

inetd.service - busybox inetd

Loaded: loaded (/etc/systemd/system/inetd.service; disabled)

Active: active (running) since Sun 2014-11-16 12:21:29 CST;

↪28s ago

Main PID: 3375 (inetd)

CGroup: /system.slice/inetd.service

↪3375 /home/nifty/bin/inetd -f

Nov 16 12:21:29 localhost.localdomain systemd[1]: Started

↪busybox inetd.

Try opening a telnet session from a different console:

/home/nifty/bin/telnet localhost 12323

You should be presented with a login prompt:

Entering character mode

Escape character is '^]'.

S

Kernel 3.10.0-123.9.3.el7.x86_64 on an x86_64

localhost.localdomain login: jdoe

Password:

Checking the status again, you see information about the connection and the session activity:

[root@localhost ~]# systemctl status inetd.service

inetd.service - busybox inetd

Loaded: loaded (/etc/systemd/system/inetd.service; disabled)

Active: active (running) since Sun 2014-11-16 12:34:04 CST;

↪7min ago

Main PID: 3927 (inetd)

CGroup: /system.slice/inetd.service

↪3927 /home/nifty/bin/inetd -f

↪4076 telnetd -i -l /home/nifty/bin/login

↪4077 -bash

You can learn more about systemd service files with the

man 5 systemd.service command.

There is an important point to make here—you have started inetd with the "-f Run in foreground" option. This is not how inetd normally is started—this option is commonly used for debugging activity. However, if you were starting inetd with a classical inittab entry,

-f would be useful in conjunction with "respawn". Without

-f, inetd immediately will fork into the background; attempting to respawn forking dæmons will launch them repeatedly. With

-f, you can configure init to relaunch inetd should it die.

Another important point is stopping the service. With a foreground dæmon and the

KillMode=process setting in the service file, the child telnetd services are not killed when the service is stopped. This is not the normal, default behavior for a systemd service, where all the children will be killed.

To see this mass kill behavior, comment out the

OPTION 1 settings in the service file (/etc/systemd/system/inetd.service), and enable the default settings in

OPTION 2. Then execute:

systemctl stop inetd.service

systemctl daemon-reload

systemctl start inetd.service

Launch another telnet session, then stop the service. When you do, your telnet sessions will all be cut with "Connection closed by foreign host." In short, the default behavior of systemd is to kill all the children of a service when a parent dies.

The

KillMode=process setting can be used with the forking version of inetd, but the "-f Run in foreground" in the first option is more specific and, thus, safer.

You can learn more about the

KillMode option with the

man 5 systemd.killcommand.

Note also that the

systemctl status output included the word "disabled". This indicates that the service will not be started at boot. Pass the

enable keyword to

systemctl for the service to set it to launch at boot (the

disable keyword will undo this).

Make some note of the commented options above. You may set environment variables for your service (here suggesting a compression quality), specify a non-root user/group and commands to be executed before the service starts or after it is halted. These capabilities are beyond the direct features offered by the classical inittab.

Of course, systemd is capable of spawning telnet servers directly, allowing you to dispense with inetd altogether. Run the following as root on the host to configure systemd for BusyBox telnetd:

systemctl stop inetd.service

echo '[Unit]

Description=mytelnet

[Socket]

ListenStream=12323

Accept=yes

[Install]

WantedBy=sockets.target'>

↪/etc/systemd/system/mytelnet.socket

echo '[Unit]

Description=mytelnet

[Service]

ExecStart=-/home/nifty/bin/telnetd telnetd -i -l

↪/home/nifty/bin/login

StandardInput=socket'>

↪/etc/systemd/system/mytelnet@.service

systemctl start mytelnet.socket

Some notes about inetd-style services:

- The socket is started, rather than the service, when inetd services are launched. Similarly, they are enabled to set them to launch at boot.

- The @ character in the service file indicates this is an "instantiated" service. They are used when a number of similar services are launched with a single service file (getty being the prime example—they also work well for Oracle database instances).

- The - prefix above in the path to the telnet server indicates that systemd should not pay attention to any stats return codes from the process.

- In the client telnet sessions, the command

cat /proc/self/cgroup will return detailed connection information for the IP addresses involved.

At this point, I have returned from my long-winded tangent, so now let's build a service file for the container. Run the following as root on the host:

echo '[Unit]

Description=nifty container

[Service]

ExecStart=/usr/bin/systemd-nspawn -bD /home/nifty

KillMode=process'> /etc/systemd/system/nifty.service

Be sure that you have shut down any other instances of the nifty container. You optionally can disable the console getty by commenting/removing the first line of /home/nifty/etc/inittab. Then use PID 1 to launch your container directly:

systemctl start nifty.service

If you check the status of the service, you will see the same level of information that you previously saw on the console:

[root@localhost ~]# systemctl status nifty.service

nifty.service - nifty container

Loaded: loaded (/etc/systemd/system/nifty.service; static)

Active: active (running) since Sun 2014-11-16 14:06:21 CST;

↪31s ago

Main PID: 5881 (systemd-nspawn)

CGroup: /system.slice/nifty.service

↪5881 /usr/bin/systemd-nspawn -bD /home/nifty

Nov 16 14:06:21 localhost.localdomain systemd[1]: Starting

↪nifty container...

Nov 16 14:06:21 localhost.localdomain systemd[1]: Started

↪nifty container.

Nov 16 14:06:26 localhost.localdomain systemd-nspawn[5881]:

↪Spawning namespace container on /home/nifty

↪(console is /dev/pts/4).

Nov 16 14:06:26 localhost.localdomain systemd-nspawn[5881]:

↪Init process in the container running as PID 5883.

Memory and Disk Consumption

BusyBox is a big program, and if you are running several containers that each have their own copy, you will waste both memory and disk space.

It is possible to share the "text" segment of the BusyBox memory usage between all running programs, but only if they are running on the same inode, from the same filesystem. The text segment is the read-only, compiled code of a program, and you can see the size like this:

[root@localhost ~]# size /home/busybox-x86_64

text data bss dec hex filename

942326 29772 19440 991538 f2132 /home/busybox-x86_64

If you want to conserve the memory used by BusyBox, one way would be to create a common /cbin that you attach to all containers as a read-only bind mount (as you did previously with lib64), and reset all the links in /bin to the new location. The root user could do this:

systemctl stop nifty.service

mkdir /home/cbin

mv /home/nifty/bin/busybox-x86_64 /home/cbin

mv /home/nifty/bin/dropbearmulti-x86_64 /home/cbin

cd /

ln -s home/cbin cbin

cd /home/nifty/bin

for x in *; do if [ -h "$x" ]; then rm -f "$x"; fi; done

/cbin/busybox-x86_64 --list | awk '{print "ln -s

↪/cbin/busybox-x86_64 " $0}' | sh

ln -s /cbin/dropbearmulti-x86_64 dropbear

ln -s /cbin/dropbearmulti-x86_64 ssh

ln -s /cbin/dropbearmulti-x86_64 scp

ln -s /cbin/dropbearmulti-x86_64 dropbearkey

ln -s /cbin/dropbearmulti-x86_64 dropbearconvert

You also could arrange to bind-mount the zoneinfo directory, saving a little more disk space in the container (and giving the container patches for time zone data in the bargain):

cd /home/nifty/usr/share

rm -rf zoneinfo

Then the service file is modified to bind /cbin and /usr/share/zoneinfo (note the altered syntax for sharing /cbin below, when the paths differ between host and container):

echo '[Unit]

Description=nifty container

[Service]

ExecStart=/usr/bin/systemd-nspawn -bD /home/nifty

--bind-ro=/home/cbin:/cbin --bind-ro=/usr/share/zoneinfo

KillMode=process'> /etc/systemd/system/nifty.service

systemctl daemon-reload

systemctl start nifty.service

Now any container using the BusyBox binary from /cbin will share the same inode. All versions of the BusyBox utilities running in those containers will share the same text segment in memory.

Infinite BusyBox

It might interesting to launch tens, hundreds, or even thousands of containers at once. You could launch the clones by making copies of the /home/nifty directory, then adjusting the systemd service file. To simplify, you will place your new containers in /home/nifty1, /home/nifty2, /home/nifty3 ... using integer suffixes on the directories to differentiate them.

Please make sure that you have disabled kernel auditing to remove the five-second delay when launching containers. At the very least, press e at the grub menu at boot time, and add the

audit=0 to your kernel command line for a one-time boot.

I'm going to return to the subject of systemd "instantiated services" that I touched upon with the telnetd service file that replaced inetd. This technique will allow you to use one service file to launch all of your containers. Such a service has an @ character in the filename that is used to refer to a particular, differentiated instance of a service, and it allows the use of the %i placeholder within the service file for variable expansion. Run the following on the host as root to place your service file for instantiated containers:

echo '[Unit]

Description=nifty container # %i

[Service]

ExecStart=/usr/bin/systemd-nspawn -bD /home/nifty%i

↪--bind-ro=/home/cbin:/cbin --bind-ro=/usr/share/zoneinfo

KillMode=process'> /etc/systemd/system/nifty@.service

The

%i above first adjusts the description, then adjusts the launch directory for the

nspawn. The content that will replace the

%i is specified on the

systemctl command line.

To test this, make a directory called /home/niftyslick. The service file doesn't limit you to numeric suffixes. You will adjust the SSH port after the copy. Run this as root on the host:

cd /home

mkdir niftyslick

(cd nifty; tar cf - .) | (cd niftyslick; tar xpf -)

sed "s/2200/2100/"< nifty/etc/inittab > niftyslick/etc/inittab

systemctl start nifty@slick.service

Bearing this pattern in mind, let's create a script to produce these containers in massive quantities. Let's make a thousand of them:

cd /home

for x in $(seq 1 999)

do

mkdir "nifty${x}"

(cd nifty; tar cf - .) | (cd "nifty${x}"; tar xpf -)

sed "s/2200/$((x+2200))/"< nifty/etc/inittab >

↪nifty${x}/etc/inittab

systemctl start nifty@${x}.service

done

As you can see below, this test launches all containers:

$ ssh -l luser -p 3199 localhost

The authenticity of host '[localhost]:3199 ([::1]:3199)'

↪can't be established.

ECDSA key fingerprint is 07:26:15:75:7d:15:56:d2:ab:9e:

↪14:8a:ac:1b:32:8c.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '[localhost]:3199' (ECDSA)

↪to the list of known hosts.

luser@localhost's password:

~ $ sh --help

BusyBox v1.21.1 (2013-07-08 11:34:59 CDT) multi-call binary.

Usage: sh [-/+OPTIONS] [-/+o OPT]... [-c 'SCRIPT'

↪[ARG0 [ARGS]] / FILE [ARGS]]

Unix shell interpreter

~ $ cat /proc/self/cgroup

10:hugetlb:/

9:perf_event:/

8:blkio:/

7:net_cls:/

6:freezer:/

5:devices:/

4:memory:/

3:cpuacct,cpu:/

2:cpuset:/

1:name=systemd:/machine.slice/machine-nifty999.scope

The output of systemctl will list each of your containers:

# systemctl

...

machine-nifty1.scope loaded active running Container nifty1

machine-nifty10.scope loaded active running Container nifty10

machine-nifty100.scope loaded active running Container nifty100

machine-nifty101.scope loaded active running Container nifty101

machine-nifty102.scope loaded active running Container nifty102

...

More detail is available with

systemctl status:

machine-nifty10.scope - Container nifty10

Loaded: loaded (/run/systemd/system/machine-nifty10.scope;

↪static)

Drop-In: /run/systemd/system/machine-nifty10.scope.d

↪90-Description.conf, 90-Slice.conf,

↪90-TimeoutStopUSec.conf

Active: active (running) since Tue 2014-11-18 23:01:21 CST;

↪11min ago

CGroup: /machine.slice/machine-nifty10.scope

↪2871 init

↪2880 /bin/syslogd

↪2882 /bin/dropbear -w -p 2210

Nov 18 23:01:21 localhost.localdomain systemd[1]:

↪Starting Container nifty10.

Nov 18 23:01:21 localhost.localdomain systemd[1]:

↪Started Container nifty10.

The raw number of containers that you can launch with this approach is more directly impacted by kernel limits than general disk and memory resources. Launching the containers above used no swap on a small system with 2GB of RAM.

After you have investigated a few of the containers and their listening ports, the easiest and cleanest way to get all of your containers shut down is likely a reboot.

Container Security

A number of concerns are raised with these features:

1) Since BusyBox and Dropbear were not installed with the RPM host package tools, updates to them will have to be loaded manually. It will be important to check from time to time if new versions are available and if any security flaws have been discovered. If it is necessary to load new versions, the binaries should be copied to all containers that are potentially used, which should then be restarted (especially if a security issue is involved).

2) Control of the root user in the container cannot be passed to an individual that you do not trust. For a particular example, if the lib64/cbin/zoneinfo bind mounts above are used, the container root user can issue the command:

mount -o remount,rw /usr/lib64

at which point the container root will have full write privileges on your 64-bit libraries, container bin or zoneinfo. The systemd-nspawn man page goes even further, with the warning:

Note that even though these security precautions are taken systemd-nspawn is not suitable for secure container setups. Many of the security features may be circumvented and are hence primarily useful to avoid accidental changes to the host system from the container. The intended use of this program is debugging and testing as well as building of packages, distributions and software involved with boot and systems management.

The crux is that untrusted users cannot have the container root, any more than you would give them full system root. The container root will have the CAP_SYS_ADMIN privilege, which allows full control of the system. If you want to isolate non-root users further, the container environment does limit non-root users' visibility into host activities, as they cannot see the full process table.

3) Note that the BusyBox su and passwd utilities above do not work when installed in the manner outlined here. They lack the appropriate filesystem permissions. To fix this,

chmod u+s busybox-x86_64could be executed, but this is also distasteful from a security perspective. Removing the links and copying the BusyBox binary to su and passwd before applying the setuid privilege might be better, but only slightly. It would be best if su was unavailable and another mechanism was found for password changes.

4) The

-w argument to the Dropbear SSH server above prevents root logins from the network. It is somewhat distasteful, from a security perspective, to relax this limitation. The net effect is that root is locked out of active use in the container when

-w is forced, and su/passwd do not have setuid. If it is at all possible to live with such an arrangement for your container, try to do so, as the security is much improved.

systemd Controversy

There is a high degree of hostility toward systemd from users of Linux. This hostility is divided into two main complaints:

- The classic inittab from UNIX System V should not be changed because it is well understood.

- Increasing features are bundled into systemd that bring dangerous complexity to a critical system process.

Toward the first point, nostalgia for legacy systems is not always misguided, but it cannot be allowed to hinder progress unreasonably. A classic System V init is not able to nspawn and has far less control over processes running on a system. The features delivered by systemd surely justify the inconvenience of change in many situations.

Toward the second point, much thought was placed into the adoption of the architecture of systemd by skilled designers from diverse organizations. Those most critical of the new environment should acknowledge the technical success of systemd as it is adopted by the majority of the Linux community.

In any case, the next decade will see popular Linux server distributions equipped with systemd, and competent administrators will not have the option of ignoring it. It is unfortunate that the introduction of systemd did not include more guidance for the user community, but the new features are compelling and should not be overlooked.

![]()

![]()

![]()