https://www.maketecheasier.com/edit-sudoers-file-linux

Within your Linux or macOS system, there’s a file called “sudoers” which controls the deepest levels of your permissions system. It determines which users can gain superuser access and holds some special preferences for sudo.

Every sudoers file will have the following line:

This permits the

If you want to add another user as root, simply copy the root line and change the user like so:

For more control, you could add a line like the following, which would only permit the “alexander” user to run apt-get update.

Put a “%” in front of the user, and it will define a group. The line below would allow every user in the group “admin” to have root-level permissions. This would be the group as defined by your OS permission groups.

For Ubuntu, you’ll want to run the Terminal command below:

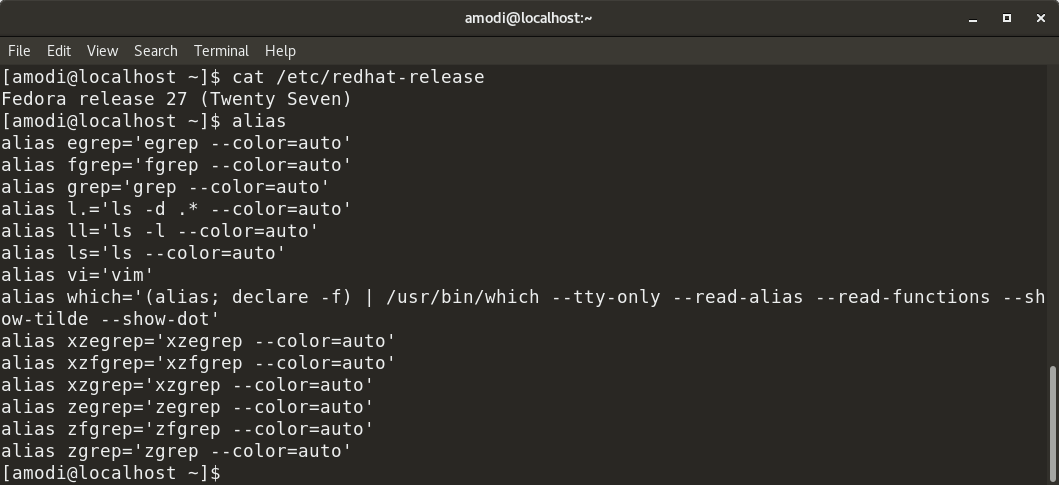

You’ll see something like the following:

If you wanted to select vim as your visudo editor from the default of nano, you would press its selection number

For other flavors of Linux, you’ll want to add a new line to your “~./bashrc” file as seen below:

Then save out the file. That would set your visudo editor to vim.

What is the sudoers file?

The sudoers file is a text file that lives at “/etc/sudoers.” It controls how sudo works on your machine. You are probably familiar with sudo’s primary role of elevating your current account’s privileges to root, the superuser on all Unix-based systems. This permits your users to execute commands that would be otherwise prohibited.

When do I need to edit the sudoers file?

When you first install Linux (or macOS), the first and default user will be auto-added to the sudoers file so it can run administrative tasks with the sudo command. However, if you create a new user account, it will not have the superuser permission by default. If you need to grant it superuser permission, you will need to edit the sudoers file and add this user account to it.

How can I edit sudoers?

Never edit the sudoers file in a normal text editor. This can lead to simultaneous editing and corrupted files, potentially denying any admin access. Sudoers must be edited by running visudo in Terminal, like so:

sudo visudo

Note that you need to use sudo to run visudo. This will open the sudoers file in the default text editor in Terminal (by default, nano).
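Part of what makes visudo safer than a plain editor is that it parses the file before saving it. As a rough sketch of that check, the snippet below writes one rule to a scratch file and asks visudo to parse it with its check-only (-c) and alternate-file (-f) options. The user name “alexander” and the variable names are illustrative assumptions, and the live /etc/sudoers is never touched.

```shell
#!/bin/sh
# Write a candidate rule to a scratch file; the live /etc/sudoers is never touched.
candidate=$(mktemp)
rule='alexander ALL=(ALL) ALL'   # hypothetical user, for illustration only
printf '%s\n' "$rule" > "$candidate"

# -c checks syntax only; -f points visudo at the scratch file.
if ! command -v visudo >/dev/null 2>&1; then
    result="skipped (visudo not on PATH)"
elif visudo -c -f "$candidate" >/dev/null 2>&1; then
    result="parsed OK"
else
    result="rejected"
fi

echo "candidate rule: $result"
rm -f "$candidate"   # clean up the scratch file
```

On systems where visudo lives in a directory that is not on a regular user's PATH, the sketch simply reports that the check was skipped.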

What can changing the sudoers file do?

The sudoers file’s main job is defining which users can use sudo for what. It also holds some simple preferences, which we can adjust first to get a feel for how visudo works.

Change the sudo timeout

By default, sudo remembers your password for a short grace period after you enter it (5 minutes in a stock sudo build; some distributions ship a different value), so follow-up sudo commands run without prompting. This can be insecure, and some might prefer entering their password each time they use sudo.

1. Run sudo visudo as mentioned above.

2. Press Alt + / to navigate to the end of the document. If you are using Vi or Vim, press Shift + G instead.

3. Create a new line at the bottom of the document and add the following line:

Defaults timestamp_timeout=0

This will set your sudo timeout to zero, so sudo asks for your password every time you run it. If you prefer a different interval, enter that value in minutes instead.

You can also set the timeout to “-1,” which gives you an infinite grace period. Don’t do that. It’s a handy way to accidentally nuke your system one day.

4. Press Ctrl + o to save and Ctrl + x to exit.
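Because timestamp_timeout is measured in minutes and accepts a fractional value, it can help to convert from seconds before typing the line into visudo. The helper below is a minimal sketch of that conversion; the function name timeout_line is hypothetical, and it only prints the line rather than editing any file.

```shell
#!/bin/sh
# Convert a grace period given in seconds into a timestamp_timeout line.
# sudoers expects minutes and accepts a fractional value such as 2.5.
timeout_line() {
    awk -v secs="$1" 'BEGIN { printf "Defaults timestamp_timeout=%g\n", secs / 60 }'
}

timeout_line 600   # ten minutes
timeout_line 90    # a minute and a half
```

The printed line can then be pasted at the bottom of the file opened by sudo visudo.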

Limit who can use sudo and for what

The main purpose of the sudoers file is to control which users can run sudo. Without sudo, users can’t elevate their permissions. If you have multiple users accessing the same system through shells, you can control their access by setting values in the sudoers file.

Every sudoers file will have the following line:

root ALL=(ALL) ALL

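This permits the root user, on ALL hosts, to run ALL commands as ALL users. ALL is a special value in the sudoers file meaning “no restrictions.” The syntax is as below:

username hostlist = (userlist) commandlist

If you want to give another user the same root-level access, simply copy the root line and change the user like so:

alexander ALL=(ALL) ALL

For more control, you could add a line like the following, which would only permit the “alexander” user to run apt-get update.

alexander ALL=(ALL) /usr/bin/apt-get update

Put a “%” in front of the name, and it will define a group. The line below would allow every user in the group “admin” to have root-level permissions. This would be the group as defined by your OS permission groups.

%admin ALL=(ALL) ALL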
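To make the four-part syntax easier to read, here is a small sketch that splits a rule into the fields named above. The function name explain_rule is hypothetical. It assumes the simple single-space shape used in this article and does not handle aliases, tags such as NOPASSWD:, or line continuations.

```shell
#!/bin/sh
# Split a simple sudoers rule of the form
#   username hostlist=(userlist) commandlist
# into its four fields. Assumes single spaces, as in the examples above.
explain_rule() {
    rule=$1
    who=${rule%% *}        # everything before the first space
    rest=${rule#* }        # everything after the first space
    hosts=${rest%%=*}      # text before the "=" sign
    rest=${rest#*=}        # text after the "=" sign
    runas=${rest#*\(}      # drop the opening parenthesis
    runas=${runas%%\)*}    # keep what was inside the parentheses
    cmds=${rest#*\) }      # everything after ") "
    printf 'user=%s hosts=%s runas=%s commands=%s\n' "$who" "$hosts" "$runas" "$cmds"
}

explain_rule 'alexander ALL=(ALL) /usr/bin/apt-get update'
explain_rule '%admin ALL=(ALL) ALL'
```

A leading “%” in the first field marks the name as a group rather than a single user.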

Change the visudo editor

Depending on what version of Linux you’re running, there are two primary ways to change the editor.

For Ubuntu, you’ll want to run the Terminal command below:

sudo update-alternatives --config editor

You’ll see something like the following:

There are 4 choices for the alternative editor (providing /usr/bin/editor).

  Selection    Path                 Priority   Status
------------------------------------------------------------
* 0            /bin/nano             40        auto mode
  1            /bin/ed              -100       manual mode
  2            /bin/nano             40        manual mode
  3            /usr/bin/vim.basic    30        manual mode
  4            /usr/bin/vim.tiny     10        manual mode

Press enter to keep the current choice[*], or type selection number: 3

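If you wanted to select vim as your visudo editor from the default of nano, you would type its selection number, 3, then press Enter.

For other flavors of Linux, you’ll want to add a new line to your “~/.bashrc” file as seen below:

export EDITOR="vim"

Then save out the file. That would set your visudo editor to vim.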
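As a hedged illustration of why that export line can work, visudo looks at the SUDO_EDITOR, VISUAL and EDITOR environment variables, in that order. The sketch below mirrors that lookup. The function name pick_editor and the “nano” fallback are assumptions for illustration, and depending on how sudo was built and configured (for example the env_editor setting), visudo may ignore these variables.

```shell
#!/bin/sh
# Mirror the order in which visudo consults editor variables:
# SUDO_EDITOR first, then VISUAL, then EDITOR.
# The "nano" fallback is an assumption taken from this article's default.
pick_editor() {
    echo "${SUDO_EDITOR:-${VISUAL:-${EDITOR:-nano}}}"
}

EDITOR="vim"
export EDITOR
echo "editor variable lookup resolves to: $(pick_editor)"
```

If the editor does not change after a new login shell, checking whether SUDO_EDITOR or VISUAL is already set is a reasonable first step.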