http://www.linuxjournal.com/content/switching-monitor-profiles

It's funny, when your home office is your couch, you tend to forget how nice it can be when you dock a laptop and have all the extra screen real estate a monitor brings. For many years, I left my work laptop docked at work, and when I worked from home, I just VPNed in with a personal laptop. Lately though, I've recognized the benefits of splitting personal life and work, so I've taken to carrying my laptop with me when I go to and from the office. Because we invested in a docking station, it's relatively simple to transition between a laptop on my lap and a laptop on a desk with an extra monitor—except for one little thing: my external monitor is in portrait mode.

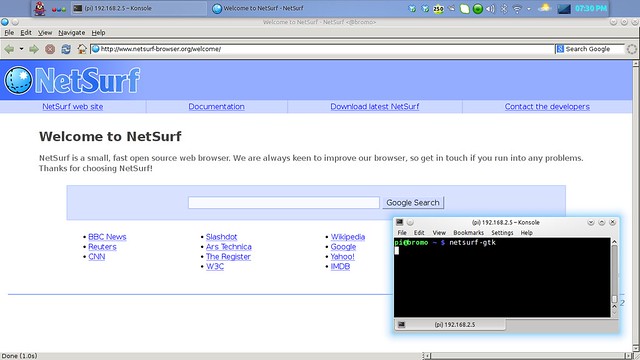

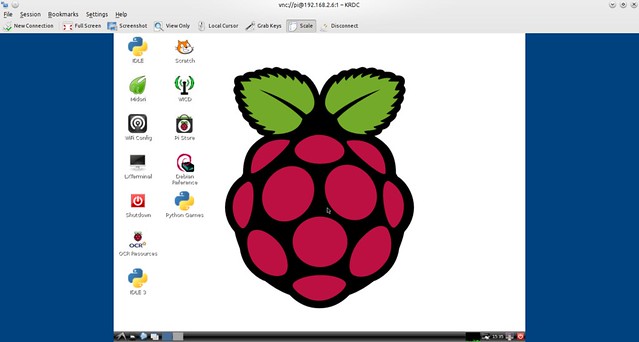

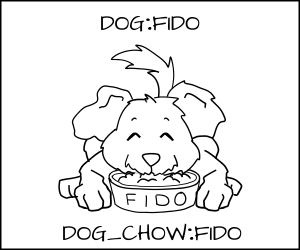

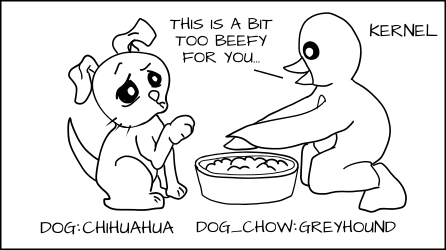

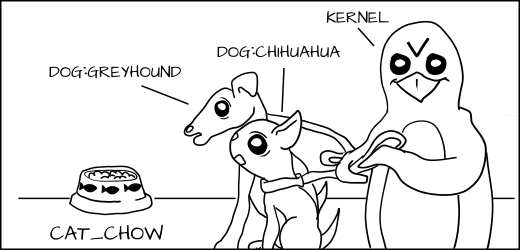

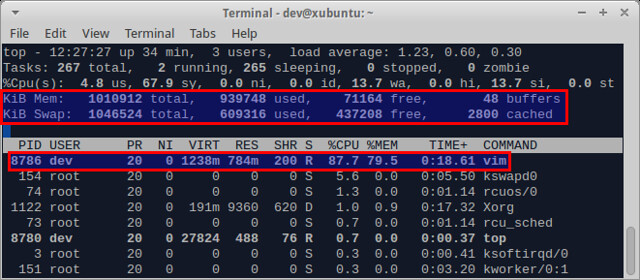

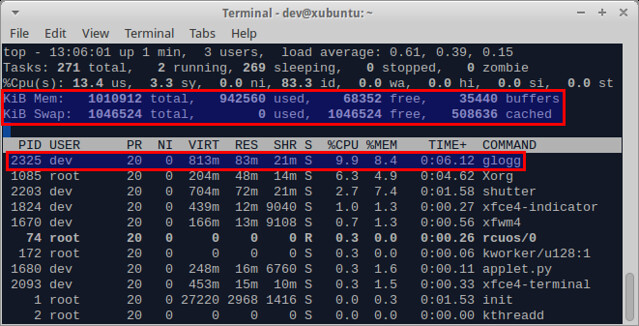

It must have been about two years ago that I started favoring widescreen monitors in portrait mode (Figure 1). Really, all I need to get work done is a Web browser and a few terminals, and I found if I keep the Web browser on the laptop screen, I can fit a nice large screen session or two in all the vertical space of a portrait-mode monitor. This makes reading man pages and other documentation nice, plus I always can split my screens vertically if I need to compare the contents of two terminals (see my "Do the Splits" column in the September 2008 issue for more information on how to do that: http://www.linuxjournal.com/article/10159). The only problem with portrait mode is that all the GUI monitor configuration tools tend not to handle portrait-mode monitors well, particularly if you want to combine them with a landscape-mode laptop screen. So, I found I needed to run a special xrandr command to set up the monitor and make sure it lined up correctly with my laptop screen. Plus, every time I transition between docked and undocked modes, I need to move my terminal windows from the large portrait-mode monitor over to a second desktop on my laptop screen. This all seemed like something a script could figure out for me, so in this article, I explain the script I use to transition from docked to undocked mode.

![]() Figure 1. Kyle's Current Desktop Setup

Figure 1. Kyle's Current Desktop Setup

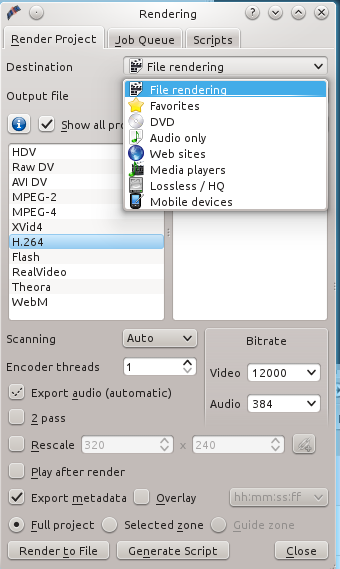

Basically, my script needs to do two things when it's run. First, it needs to run the appropriate xrandr command to enable or disable the external display, and second, it needs to reset all of my windows to their default location. Although I could just have one script I run when I'm docked and another when I'm undocked, I can find out my state from the system itself, so I can keep everything within one script. I've set up a script like this on my last two work-provided laptops and on the ThinkPad X220, I was able to use a /sys file to gauge the state of the dock:

Now that I can detect whether I'm docked, I should do something about it. The first thing I need to do is to enable output on my external monitor (HDMI3), tell xrandr that it's to the right of my laptop screen, and set it to portrait mode by telling xrandr to rotate it left:

It's funny, when your home office is your couch, you tend to forget how nice it can be when you dock a laptop and have all the extra screen real estate a monitor brings. For many years, I left my work laptop docked at work, and when I worked from home, I just VPNed in with a personal laptop. Lately though, I've recognized the benefits of splitting personal life and work, so I've taken to carrying my laptop with me when I go to and from the office. Because we invested in a docking station, it's relatively simple to transition between a laptop on my lap and a laptop on a desk with an extra monitor—except for one little thing: my external monitor is in portrait mode.

It must have been about two years ago that I started favoring widescreen monitors in portrait mode (Figure 1). Really, all I need to get work done is a Web browser and a few terminals, and I found if I keep the Web browser on the laptop screen, I can fit a nice large screen session or two in all the vertical space of a portrait-mode monitor. This makes reading man pages and other documentation nice, plus I always can split my screens vertically if I need to compare the contents of two terminals (see my "Do the Splits" column in the September 2008 issue for more information on how to do that: http://www.linuxjournal.com/article/10159). The only problem with portrait mode is that all the GUI monitor configuration tools tend not to handle portrait-mode monitors well, particularly if you want to combine them with a landscape-mode laptop screen. So, I found I needed to run a special xrandr command to set up the monitor and make sure it lined up correctly with my laptop screen. Plus, every time I transition between docked and undocked modes, I need to move my terminal windows from the large portrait-mode monitor over to a second desktop on my laptop screen. This all seemed like something a script could figure out for me, so in this article, I explain the script I use to transition from docked to undocked mode.

Figure 1. Kyle's Current Desktop Setup

Figure 1. Kyle's Current Desktop Setup Basically, my script needs to do two things when it's run. First, it needs to run the appropriate xrandr command to enable or disable the external display, and second, it needs to reset all of my windows to their default location. Although I could just have one script I run when I'm docked and another when I'm undocked, I can find out my state from the system itself, so I can keep everything within one script. I've set up a script like this on my last two work-provided laptops and on the ThinkPad X220, I was able to use a /sys file to gauge the state of the dock:

#!/bin/bash

DOCKED=$(cat /sys/devices/platform/dock.0/docked)

case "$DOCKED" in

"0")

echo undocked

;;

"1")

echo docked

;;

esac

$ xrandr

Screen 0: minimum 320 x 200, current 1366 x 768, maximum 8192 x 8192

LVDS1 connected 1366x768+0+0 (normal left inverted right x axis y axis)

↪277mm x 156mm

1366x768 60.0*+

1360x768 59.8 60.0

1024x768 60.0

800x600 60.3 56.2

640x480 59.9

VGA1 disconnected (normal left inverted right x axis y axis)

HDMI1 disconnected (normal left inverted right x axis y axis)

DP1 disconnected (normal left inverted right x axis y axis)

HDMI2 disconnected (normal left inverted right x axis y axis)

HDMI3 disconnected (normal left inverted right x axis y axis)

DP2 disconnected (normal left inverted right x axis y axis)

DP3 disconnected (normal left inverted right x axis y axis)

#!/bin/bash

xrandr | grep -q "HDMI3 disconnected"

case "$?" in

"0")

echo undocked

;;

"1")

echo docked

;;

esac

Now that I can detect whether I'm docked, I should do something about it. The first thing I need to do is to enable output on my external monitor (HDMI3), tell xrandr that it's to the right of my laptop screen, and set it to portrait mode by telling xrandr to rotate it left:

/usr/bin/xrandr --output HDMI3 --auto --right-of LVDS1 --rotate left

/usr/bin/xrandr --output LVDS1 --pos 0x1152

/usr/bin/xrandr --output HDMI3 --off

/usr/bin/xrandr --output LVDS1 --pos 0x0

#!/bin/bash

xrandr | grep -q "HDMI3 disconnected"

case "$?" in

"0") # undocked

/usr/bin/xrandr --output HDMI3 --off

/usr/bin/xrandr --output LVDS1 --pos 0x0

;;

"1") # docked

/usr/bin/xrandr --output HDMI3 --auto --right-of LVDS1

↪--rotate left

/usr/bin/xrandr --output LVDS1 --pos 0x1152

;;

esac

#!/bin/bash

xrandr | grep -q "HDMI3 disconnected"

case "$?" in

"0") # undocked

wmctrl -r 'kyle-ThinkPad-X230' -t 1

wmctrl -r 'kyle-ThinkPad-X230' -e '0,2,24,1362,362'

wmctrl -r snowball -t 1

wmctrl -r snowball -e '0,2,410,1362,328'

;;

"1") # docked

wmctrl -r 'kyle-ThinkPad-X230' -t 0

wmctrl -r 'kyle-ThinkPad-X230' -e '0,1368,0,1080,1365'

wmctrl -r snowball -t 0

wmctrl -r snowball -e '0,1368,1387,1080,512'

;;

esac

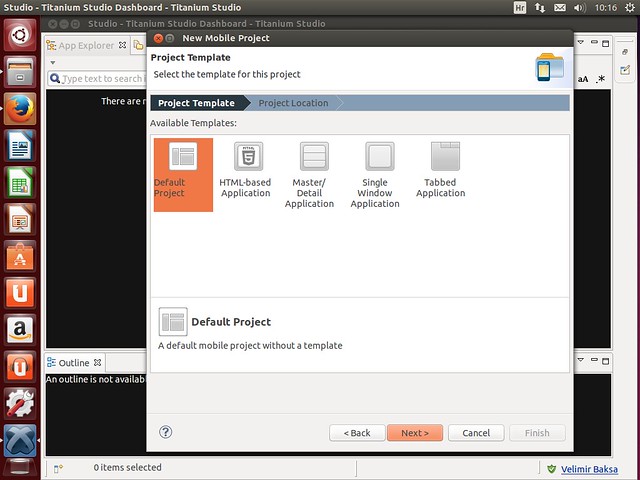

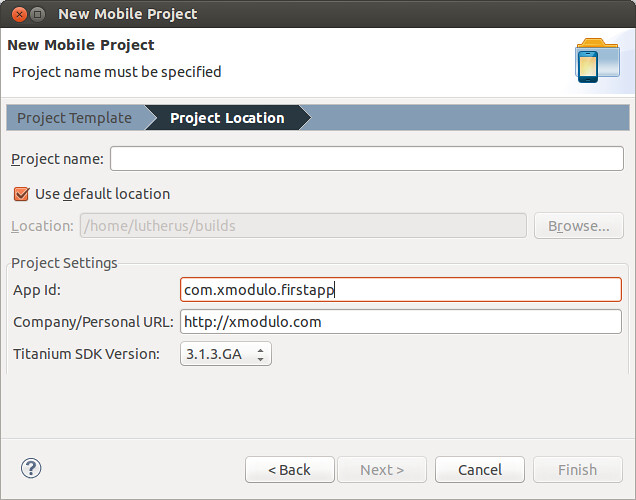

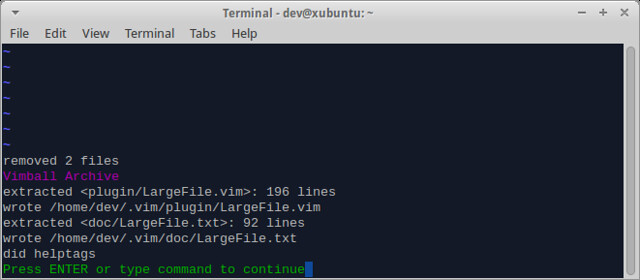

The main page for a running Weinre instance, ready for clients to connect to it.

The main page for a running Weinre instance, ready for clients to connect to it. A remote app has connected to a Weinre server.

A remote app has connected to a Weinre server. The Console tab shows all console output, and also lets you work with JavaScript.

The Console tab shows all console output, and also lets you work with JavaScript. The Elements tab lets you dynamically inspect your web page.

The Elements tab lets you dynamically inspect your web page. PhoneGap also provides its own Weinre instance if you don't want or have one of your own.

PhoneGap also provides its own Weinre instance if you don't want or have one of your own. You can configure the PhoneGap Build Service to enable debugging on its own.

You can configure the PhoneGap Build Service to enable debugging on its own.

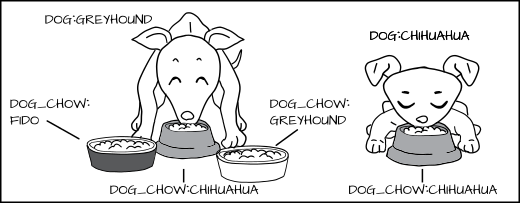

Figure 1. Boot Sequence

Figure 1. Boot Sequence