http://blog.getcrane.com/10-open-source-docker-tools-you-should-be-using

You may have heard of this thing called Docker. You know, the one which has fostered over 20,000 open source projects (including orchestration tools and management frameworks) and over 85,000 Dockerized applications?

But Docker is much more than an application platform.

In fact, the open source community offers a ton of tools that augment Docker and can be hugely valuable if you’re getting started. So we decided to round up the most useful open source Docker tools so that you can be sure that your business is taking advantage of all that Docker has to offer.

And for even more Docker development tools and tips you should know, you can subscribe to our weekly roundup of Docker news articles here.

Google initially developed Kubernetes to help manage its own LXC containers - even before Docker! Kubernetes is great at managing workloads for Docker nodes and balancing container deployments across a cluster. Check it out - it provides ways for containers to communicate with each other without having to open network ports.

![Kubernetes]()

Best of all, Google has open-sourced Kubernetes. If you haven’t already tried Kubernetes, follow the steps in the Kubernetes Github Getting Started guides to get up and running. Keep in mind that Kubernetes is an industrial strength Docker orchestration tool, with accompanying industrial strength complexity, so you’d be advised to keep your use cases quite simple at first.

Watch Kubernetes on Github:

![dockersh-and-a-brief-intro-to-the-docker-internals-11-638]()

With the obvious worries over security that come with giving users shell access, the jury is definitely out over whether you would want to run Dockersh in production.

Even Yelp themselves do not use dockersh in production, but the concept demonstrates how the developer ecosystem is innovating with Docker, and the project remains one to watch.

Watch Dockersh on Github:

Soundcloud adopted a microservices architecture when it became clear that the monolithic Ruby on Rails application that it had first built in 2007 could not perform reliably under web scale traffic.

![promdash_event_processor-1b82c8fbde0e1e14a1cae7dec0fef29b]()

With a microservices architecture, interrelated processes are divided into smaller logic domains. As an application scales up, new processes are spawned, rather than entire blocks of functionality.

However, when you're running hundreds or even thousands of microservices, its difficult to monitor the statuses of aggregate jobs. Because of this, you lose visibility of where bottlenecks are occurring and how to fix them.

Prometheus is aimed at solving this problem. Here's an overview of how Prometheus can help you, straight from the Soundcloud team.

Watch Prometheus on Github:

![B4BlZr6IMAAhAKx]()

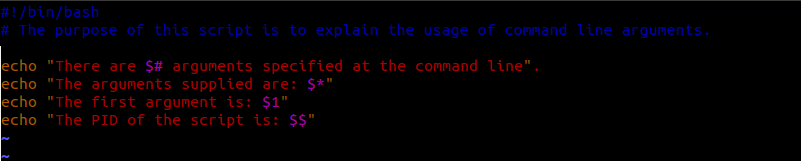

If you have yet to try it, here's how it works: after you've written your Dockerfile, you write a simple

Its worth bearing in mind that Docker Compose (previously known as Fig) does not support remote orchestration, meaning that it is only possible to orchestrate an infrastructure on a single host. If you want to scale an app to multiple servers, you will need more involved tools such as Kubernetes.

Watch Docker Compose on Github:

![empty-images.027c]()

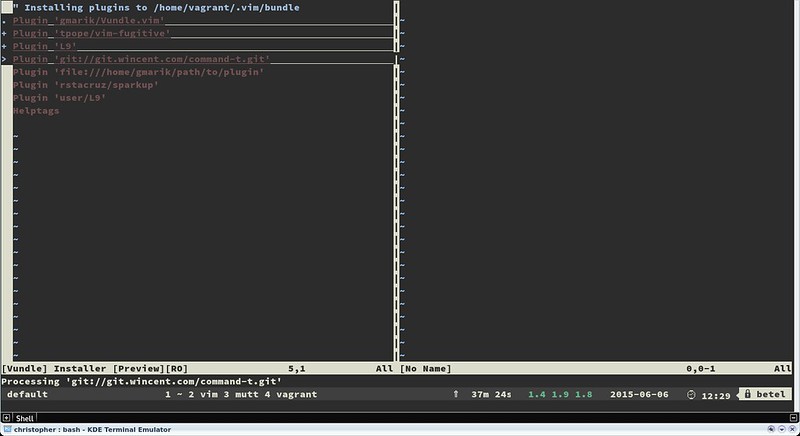

Kitematic, now acquired by Docker, can also help you access environment variables, container logs, provide shell access to containers and restart containers. Keep in mind that Kitematic is still evolving as a project, so one or two breaking fixes have occurred in its history - as well as conflicts with other tools.

Watch Kitematic on Github:

![2fbde119-22fc-e745-33c8-57ed6a2528e4]()

Logspout is currently limited to

The introduction of structured header data, planned for a future release, will allow Logspout to be integrated with tools such as Logentries.

Watch Logspout on Github:

![flocker-architecture-diagram]()

Thinking about bringing Docker into production but really want a way of managing your data in a containerized way? Give Flocker a try.

Watch Flocker on Github:

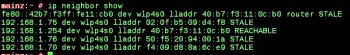

Weave is a container-specific implementation of software-defined networking across data centers, and caters mainly to network admins looking to deploy complex applications that span multiple servers.

Since its launch last September, it's become one of the most popular Docker-related projects on Github.

Watch Weave on Github:

For instance, you can run the distributed data application Crate.io alongside Flocker, Weave and Powerstrip. This is great if you want to run multiple services together without wrapping the Docker API.

Watch Powerstrip on Github:

![heliospic]()

One of the killer features of Helios is the ability to recognize when a "container is dead." If someone accidentally shuts down a mission critical container, Helios can be figured to recognize and instantly load one back up.

Although there are now other orchestration frameworks around, Helios has established a strong track record for operating at scale, and is one of the most watched projects on Github.

Are you taking advantage of these essential open source Docker tools?

10 Helpful Docker Tools For Developers

1) Kubernetes

Want the Swiss Army knife approach to Docker orchestration? If so, Google’s Kubernetes is your friend.

Google initially developed Kubernetes to help manage its own LXC containers - even before Docker! Kubernetes is great at managing workloads for Docker nodes and balancing container deployments across a cluster. It also provides ways for containers to communicate with each other without having to open network ports.

Best of all, Google has open-sourced Kubernetes. If you haven’t already tried Kubernetes, follow the steps in the Getting Started guides in the Kubernetes GitHub repository to get up and running. Keep in mind that Kubernetes is an industrial-strength Docker orchestration tool, with accompanying industrial-strength complexity, so you’d be advised to keep your use cases quite simple at first.
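To make the idea of balancing container deployments across a cluster more concrete, here is a minimal Python sketch. It is a toy "spread" strategy written for illustration, not the Kubernetes scheduler, and the `Node` class, `schedule` function and node names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A hypothetical cluster node with a fixed number of container slots."""
    name: str
    capacity: int
    containers: list = field(default_factory=list)

    def free_slots(self) -> int:
        return self.capacity - len(self.containers)


def schedule(containers, nodes):
    """Place each container on the node that currently has the most free slots.

    Toy strategy for illustration only; real orchestrators weigh many more factors.
    """
    placement = {}
    for container in containers:
        # max() returns the first node among ties, so placement is deterministic.
        target = max(nodes, key=Node.free_slots)
        if target.free_slots() <= 0:
            raise RuntimeError(f"no capacity left for {container}")
        target.containers.append(container)
        placement[container] = target.name
    return placement


if __name__ == "__main__":
    cluster = [Node("node-a", 2), Node("node-b", 2)]
    print(schedule(["web-1", "web-2", "db-1"], cluster))
```

Even this small version shows why orchestration gets complicated quickly: once capacity, failures and networking enter the picture, the placement decision stops being a one-liner.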

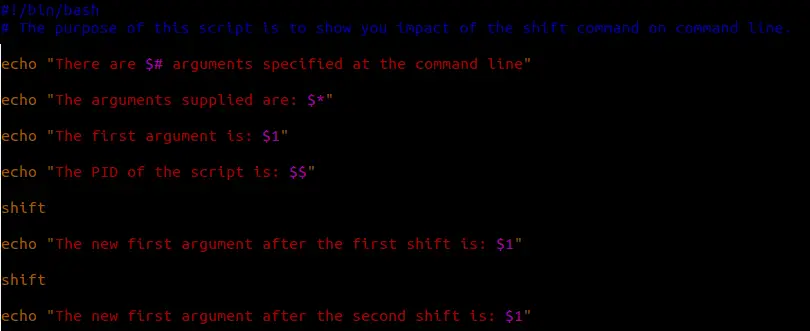

2) Dockersh

Developed by Yelp as part of its testing and service management infrastructure suite, Dockersh works by allowing each user to have their own individual Docker container (a bit like a lightweight VM). Users are able to see their own home directory and make changes to it, but they can only see their own processes and have their own network stack.

With the obvious worries over security that come with giving users shell access, the jury is still out on whether you would want to run Dockersh in production.

Even Yelp does not use Dockersh in production, but the concept demonstrates how the developer ecosystem is innovating with Docker, and the project remains one to watch.

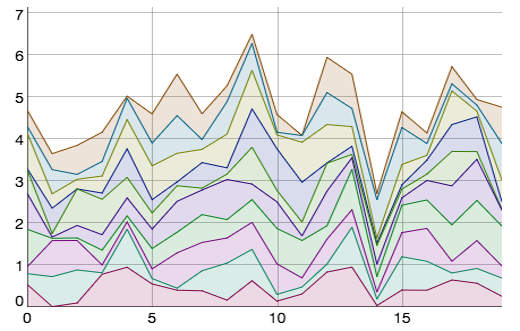

3) Prometheus

Want better insight into how healthy your microservices are? Just run SoundCloud's open source monitoring tool, Prometheus.

SoundCloud adopted a microservices architecture when it became clear that the monolithic Ruby on Rails application that it had first built in 2007 could not perform reliably under web-scale traffic.

With a microservices architecture, interrelated processes are divided into smaller logic domains. As an application scales up, new processes are spawned, rather than entire blocks of functionality.

However, when you're running hundreds or even thousands of microservices, it's difficult to monitor the statuses of aggregate jobs. Because of this, you lose visibility into where bottlenecks are occurring and how to fix them.

Prometheus is aimed at solving this problem. Here's an overview of how Prometheus can help you, straight from the SoundCloud team.
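As a rough illustration of what monitoring looks like from a single microservice's side, the sketch below renders a couple of counters in the Prometheus text exposition format, which a service would typically serve over HTTP for Prometheus to scrape. The metric names, the `service` label and the `render_metrics` helper are assumptions made up for this example, not part of any Prometheus client library.

```python
def render_metrics(service, counters):
    """Render counters in the Prometheus text exposition format.

    `counters` maps a metric name to a (help text, value) pair.
    """
    lines = []
    for name, (help_text, value) in sorted(counters.items()):
        lines.append(f"# HELP {name} {help_text}")
        lines.append(f"# TYPE {name} counter")
        # One sample per metric, labelled with the service that produced it.
        lines.append(f'{name}{{service="{service}"}} {value}')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical counters for a hypothetical "event-processor" service.
    counters = {
        "jobs_processed_total": ("Jobs processed since start.", 1027),
        "jobs_failed_total": ("Jobs that ended in an error.", 3),
    }
    print(render_metrics("event-processor", counters), end="")
```

With every service exposing the same kind of output, comparing failure counts across hundreds of services becomes a query rather than a hunt through individual logs.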

4) Docker Compose

If you want to define and run complex applications using Docker, it might make sense to use a tool like Docker Compose, Docker's orchestration product.

If you have yet to try it, here's how it works: after you've written your Dockerfile, you write a simple `docker-compose.yml` file that lists the Docker containers your app needs, and then run `docker-compose up`. Compose will then start and run your app.

It's worth bearing in mind that Docker Compose (previously known as Fig) does not support remote orchestration, meaning that it is only possible to orchestrate an infrastructure on a single host. If you want to scale an app to multiple servers, you will need more involved tools such as Kubernetes.
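To give a feel for the Compose workflow described above, this Python sketch writes a minimal `docker-compose.yml` into a project directory. The `web` and `redis` service names, the port mapping and the `write_compose_file` helper are assumptions chosen for illustration. The file uses the current `services:` layout, whereas files from the Fig era put service names at the top level.

```python
import tempfile
from pathlib import Path

# A hypothetical two-container app: a web service built from the local
# Dockerfile, plus a Redis container pulled from a public image.
COMPOSE_FILE = """\
services:
  web:
    build: .
    ports:
      - "5000:5000"
    depends_on:
      - redis
  redis:
    image: redis
"""


def write_compose_file(directory):
    """Write docker-compose.yml into `directory` and return its path."""
    path = Path(directory) / "docker-compose.yml"
    path.write_text(COMPOSE_FILE)
    return path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as project_dir:
        print(write_compose_file(project_dir).read_text(), end="")
```

With a file like this next to your Dockerfile, running `docker-compose up` in the same directory should build the `web` image and start both containers on the local host.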

5) Kitematic

If you're looking for a desktop developer environment for using Docker on Mac OS X, you'll want to take a look at Kitematic. Kitematic helps you run Docker images, spin them up and manage them in the same way as you would in VMware Workstation.

Kitematic, now acquired by Docker, can also help you access environment variables and container logs, open a shell in a container and restart containers. Keep in mind that Kitematic is still evolving as a project, so one or two breaking changes have occurred in its history - as well as conflicts with other tools.

6) Logspout

In addition to Kitematic, Logspout can be a great tool for helping you manage logs generated by programs running inside Docker containers. It enables you to route container-app logs to a single location, such as a JSON object or a streamed endpoint available over HTTP.

Logspout is currently limited to `stdout` and `stderr` because of Docker's logging API, but with more hooks planned in time, this will likely increase.

The introduction of structured header data, planned for a future release, will allow Logspout to be integrated with tools such as Logentries.
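The routing idea can be sketched in a few lines of Python: take the `stdout` and `stderr` lines of several containers and write them to one destination as JSON objects. This is a toy model, not Logspout's implementation. The `route_logs` function, the record fields and the container names are all hypothetical, and the sources are drained one after another rather than interleaved in real time.

```python
import io
import json


def route_logs(sources, sink):
    """Write every line from every source to one sink as JSON objects.

    `sources` maps (container name, stream name) to an iterable of text lines.
    Returns the number of records written.
    """
    count = 0
    for (container, stream), lines in sources.items():
        for line in lines:
            record = {
                "container": container,
                "stream": stream,
                "message": line.rstrip("\n"),
            }
            sink.write(json.dumps(record) + "\n")
            count += 1
    return count


if __name__ == "__main__":
    # In-memory stand-ins for the output of two hypothetical containers.
    sources = {
        ("web", "stdout"): io.StringIO("listening on :5000\n"),
        ("web", "stderr"): io.StringIO("cache miss\n"),
        ("worker", "stdout"): io.StringIO("job 1 done\njob 2 done\n"),
    }
    sink = io.StringIO()
    route_logs(sources, sink)
    print(sink.getvalue(), end="")
```

Tagging each line with its container and stream is what makes a single destination usable: downstream tools can filter by either field without knowing where the container ran.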

7) Flocker

Flocker is an enterprise-grade data volume manager and multi-host cluster management tool - and what's more, it's free. If you've tried to build a production system with containers, you've probably run into the "stateful" problem: what do you do about the stateful parts of the app - areas such as backups, disaster recovery and compliance - which typically sit outside of the Docker environment?

Thinking about bringing Docker into production but really want a way of managing your data in a containerized way? Give Flocker a try.

8) Weave

Looking to connect Docker containers on a virtual network across many data center environments? Then you'll be interested in Weave.

Weave is a container-specific implementation of software-defined networking across data centers, and caters mainly to network admins looking to deploy complex applications that span multiple servers.

Since its launch last September, it's become one of the most popular Docker-related projects on GitHub.

9) Powerstrip

Powerstrip enables you to build prototypes of Docker extensions. Once set up, you can compose adapters such as Flocker and Weave alongside your chosen orchestration framework, without having to rebuild Docker each time.

For instance, you can run the distributed data application Crate.io alongside Flocker, Weave and Powerstrip. This is great if you want to run multiple services together without wrapping the Docker API.

10) Helios

As enterprises look to run large volumes of containerized backend services in production, orchestration frameworks such as Helios will become critical. Spotify developed Helios internally as Docker was beginning to gain traction, and uses it to ensure that hundreds of microservices across several thousand servers work as they should.

One of the killer features of Helios is the ability to recognize when a "container is dead." If someone accidentally shuts down a mission-critical container, Helios can be configured to recognize this and instantly load one back up.
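The "container is dead" recovery can be thought of as a reconciliation step: compare the containers that should be running with the ones that actually are, and start whatever is missing. The sketch below is a hedged illustration of that idea in Python, not Helios's implementation. The `reconcile` function and the container names are hypothetical, and the `start` callback stands in for whatever actually launches a container.

```python
def reconcile(desired, running, start):
    """Start every desired container that is not currently running.

    `desired` and `running` are sets of container names; `start` is a callable
    that launches a container by name. Returns the names that were restarted.
    """
    restarted = []
    for name in sorted(desired):
        if name not in running:
            start(name)
            restarted.append(name)
    return restarted


if __name__ == "__main__":
    desired = {"api", "db", "search"}
    running = {"db"}  # "api" and "search" were shut down by accident
    for name in reconcile(desired, running, start=lambda n: print(f"starting {n}")):
        print(f"{name} was dead and has been restarted")
```

Running a check like this on a short interval is what turns an accidental shutdown into a brief blip rather than an outage.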

Although there are now other orchestration frameworks around, Helios has established a strong track record for operating at scale, and is one of the most watched projects on GitHub.

Figure 1. A visualization of Linux running-bare metal (a) and under the Jailhouse hypervisor (b) alongside a real-time application. (Image from Yulia Sinitsyna; Tux image from Larry Ewing.)

Figure 1. A visualization of Linux running-bare metal (a) and under the Jailhouse hypervisor (b) alongside a real-time application. (Image from Yulia Sinitsyna; Tux image from Larry Ewing.)  Figure 2. A typical configuration issue: Jailhouse traps "prohibited" operation from the root cell.

Figure 2. A typical configuration issue: Jailhouse traps "prohibited" operation from the root cell.  Figure 3. Jailhouse cell listing—the same information is available through the sysfs interface.

Figure 3. Jailhouse cell listing—the same information is available through the sysfs interface.  Figure 4. Jailhouse cell statistics give an insight into how cells communicate with the hypervisor.

Figure 4. Jailhouse cell statistics give an insight into how cells communicate with the hypervisor.  Figure a. A must-have toolkit to run Jailhouse bare metal: serial-to-USB converter, null modem cable (attached) and mountable COM port. (Image from Yulia Sinitsyna.)

Figure a. A must-have toolkit to run Jailhouse bare metal: serial-to-USB converter, null modem cable (attached) and mountable COM port. (Image from Yulia Sinitsyna.)

Figure 1. Block Diagram of the Hardware System

Figure 1. Block Diagram of the Hardware System  Figure 2. The RPi's GPIO Pins

Figure 2. The RPi's GPIO Pins  Figure 3. The Hardware Setup

Figure 3. The Hardware Setup  Figure 4. Block Diagram of the System

Figure 4. Block Diagram of the System

Figure 1. Recommended Hardware Setup for a Cluster Running LUCI4HPC

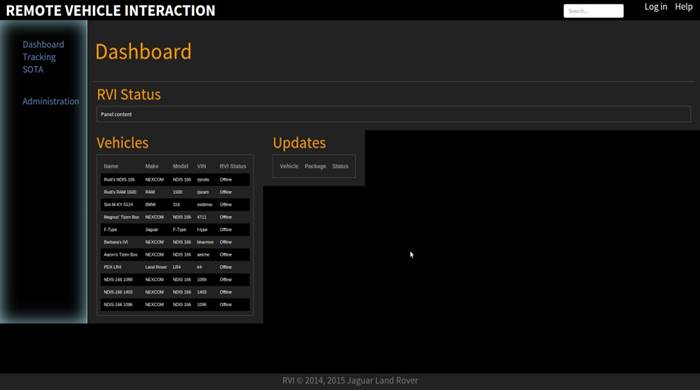

Figure 1. Recommended Hardware Setup for a Cluster Running LUCI4HPC  Figure 2. LUCI4HPC Web-Based Control Panel, Cluster Overview Page

Figure 2. LUCI4HPC Web-Based Control Panel, Cluster Overview Page